This course is from edX.

About this course

Data is driving our world today. However, we hear about data breaches and identity theft all the time. Trust on the Internet is broken, and it needs to be fixed. As such, it is imperative that we adopt a new approach to identity management, and ensure data security and user privacy through tamper-proof transactions and infrastructures.

Blockchain-based identity management is revolutionizing this space. The tools, libraries, and reusable components that come with the three open source Hyperledger projects, Aries, Indy and Ursa, provide a foundation for distributed applications built on authentic data using a distributed ledger, purpose-built for decentralized identity.

This course focuses on building applications on top of Hyperledger Aries components, the area where you, as a self-sovereign identity (SSI) application developer, can have the most impact. While you need a good understanding of Indy (and other ledger/verifiable credential technologies) and a basic idea of what Ursa (the underlying cryptography) is and does, Aries is where you need to dig deep.

What you’ll learn

- Understand the Hyperledger Aries architecture and its components.

- Discuss the DIDComm protocol for peer-to-peer messages.

- Deploy instances of Aries agents and establish a connection between two or more Aries agents.

- Create from scratch or extend Aries agents to add business logic.

- Understand the possibilities available through the implementation of Aries agents.

Welcome!

Introduction and Learning Objectives

Introduction

Welcome to LFS173x – Becoming a Hyperledger Aries Developer!

The three Hyperledger projects, Indy, Aries and Ursa, provide a foundation for distributed applications built on authentic data using a distributed ledger, purpose-built for decentralized identity. Together, they provide tools, libraries, and reusable components for creating and using independent digital identities rooted in blockchains or other distributed ledgers so that they are interoperable across administrative domains, applications, and any other “silo.” While this course will mention Indy and Ursa, its main focus will be Aries and the possibilities it brings for building applications on a solid digital foundation of trust. This focus will be explained further in the course, but for now, rest assured: if you want to start developing identity-focused applications on the blockchain, this is where you need to be.

What You Will Learn

This course is designed to get students from the basics of Trust over IP (ToIP) and self-sovereign identity (SSI)—what you learned about in the last course (LFS172x – Introduction to Hyperledger Sovereign Identity Blockchain Solutions: Indy, Aries & Ursa)—to developing code for issuing (and verifying) credentials with your own Aries agent.

Terminology

We use the terms blockchain, ledger, decentralized ledger technology (DLT) and decentralized ledger (DL) interchangeably in this course. While there are precise meanings of those terms in other contexts, in the context of the material covered in this course the differences are not meaningful.

For more definitions, take a look at our course Glossary section.

Labs and Demos

Throughout this course there will be labs and demos that are a key part of the content and that you are strongly advised to complete. The labs and demos are hosted on GitHub so that we can maintain them easily as the underlying technology evolves. Many will be short interactions between agents. In all cases, you will have access to the underlying code to dig into, run and alter.

For some labs, you won’t need anything on your laptop except your browser (and perhaps your mobile phone). For others, you can run the labs either locally on your own system or in your browser using a service called Play with Docker, for which we provide instructions. Play with Docker gives you a terminal command line environment in your browser so you don’t have to install everything locally. The downside of using Play with Docker is that you don’t have all the code locally to review and update in your local code editor.

When you run the labs locally, you need the following prerequisites installed on your system:

- A terminal command line interface running bash shell.

– This is built-in for Mac and Linux, and on Windows, the “git-bash” shell comes with git (see installation instructions below).

- Docker, including Docker Compose – Community Edition is fine.

– If you do not already have Docker installed, open Docker Docs, “Supported Platforms” and then click the link for the installation instructions for your platform.

– Instructions for installing docker-compose for a variety of platforms can be found here.

- Git

– This link provides installation instructions for Mac, Linux (including if you are running Linux using VirtualBox) and native Windows (without VirtualBox).

All of the labs that you can run locally use Docker. You can run the labs directly on your own system without Docker, but we don’t provide instructions for doing that, and we highly recommend you not try that until you have run through them with Docker. Because of the differences across systems, it’s difficult to provide universal instructions, and we’ve seen many developers spend too much time trying to get everything installed and working right.

The teams we work with only use Docker/containers for development and production deployment. In other cases, developers unfamiliar with (or not interested in) Docker set up their own native development environment. However, doing so is outside the scope of the labs in this course.

Acknowledgements

We have many, many people to thank for this course. The first and foremost is the Linux Foundation for allowing us to share what we know about this evolving and exciting technology. In particular, thank you Flavia and Magda for your guidance and expertise in getting this course online.

We would also like to thank the developers, visionaries and change-seekers in the Hyperledger Indy, Aries and Ursa world: the people we talk to on the weekly calls and in daily Rocket.Chat conversations, the people we meet at conferences, and the people who are driving this technology on a daily basis to make the Internet a better place. We would especially like to thank Hyperledger contributors, the Sovrin Foundation, the BC Government VON team and Evernym for their contributions to this ecosystem. As well, a shout out goes to Akiff Manji (@amanji on GitHub) for contributing the Aries ACA-Py Controllers repository and for his updates to the Aries API demo. And to Hannah Curran for her keen editing eye.

While we have tried to be as accurate and timely as possible, these tools and libraries are changing rapidly. There are no doubt mistakes and we own them. Keeping this in mind, we have created a change log on GitHub to track course updates that are needed when mistakes are found in the content and when a major change or shift occurs within the Hyperledger Indy, Aries and Ursa space. If you find an error, a need for a content update, or something in one of the demos doesn’t work, please let us know via the GitHub repo.

Thank you for taking this course!

Chapter 1. Overview

Chapter Overview

Data breaches. Identity theft. Large companies exploiting our personal information. Trust on the Internet. We read about these Internet issues all the time. Simply put, the Internet is broken and it needs to be fixed.

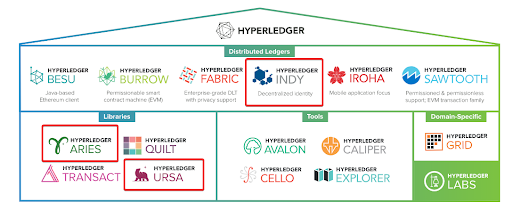

This is where the Hyperledger Indy, Aries and Ursa projects come in and, we assume, one of the main reasons you are taking this course. The Indy, Aries and Ursa tools, libraries, and reusable components provide a foundation for distributed applications to be built on authentic data using a distributed ledger, purpose-built for decentralized identity (refer to the following image).

The Hyperledger Frameworks and Tools

Licensed under CC BY 4.0

Note: This course is called Becoming a Hyperledger Aries Developer because it focuses on building applications on top of Hyperledger Aries components—the area where you, as an SSI application developer, can have the most impact. Aries builds on Indy and Ursa. While you need to have a good understanding of Indy (and other ledger/verifiable credential technologies) and a basic idea of what Ursa (the underlying cryptography) is and does, Aries is where you need to dig deep.

Learning Objectives

By the end of this chapter you should:

- Understand why this course focuses on Aries (and not Indy or Ursa).

- Understand the problems Aries is trying to fix.

- Know the core concepts behind self-sovereign identity.

Why Focus on Aries Development?

Hyperledger Indy, Aries and Ursa make it possible to:

- Establish a secure, private channel with another person, organization, or IoT thing—like authentication plus a virtual private network—but with no session and no login.

- Send and receive arbitrary messages with high security and privacy.

- Prove things about yourself; receive and validate proofs about other parties.

- Create an agent that proxies/represents you in the cloud or on edge devices.

- Manage your own identity:

– Authorize or revoke devices.

– Create, update and revoke keys.

However, you will have relatively little interaction with Indy, and almost none with Ursa, as the vast majority of those working with the Hyperledger identity solutions will build on top of Aries. Only those contributing code directly to Indy, Aries and Ursa (e.g., fixing a flaw in a crypto algorithm implementation) will have significant interaction with Indy and Ursa. And here’s another big takeaway: while all three projects are focused on decentralized identity, Indy is a specific blockchain, whereas Aries is blockchain-agnostic.

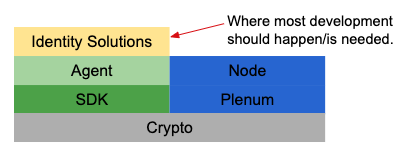

Where Most Development Happens

Licensed under CC BY 4.0

Why We Need Identity Solutions

In today’s world, we are issued credentials as documents (for example, our driver’s license). When we need to prove who we are, we hand over the document. The verifier looks at the document and attempts to ascertain whether it is valid. The holder of the document cannot choose to only hand over a certain piece of the document but must hand over the entire thing.

A typical paper credential, such as a driver’s license, is issued by a government authority (an issuer) after you prove to them who you are (usually in person using your passport or birth certificate) and that you are qualified to drive. You then hold this credential (usually in your wallet) and can use it elsewhere whenever you want—for example, when renting a car, in a bank to open up an account or in a bar to prove that you are old enough to drink. When you do that you’ve proven (or presented) the credential. That’s the paper credential model.

Examples of Paper Credentials

By Peter Stokyo

Licensed under CC BY 4.0

The paper credential model (ideally) proves:

- Who issued the credential.

- Who holds the credential.

- The claims have not been altered.

The caveat “ideally” is included because of the possibility of forgery in the use of paper credentials. As many university students know, it’s pretty easy to get a fake driver’s license that can be used when needed to “prove” those same things.

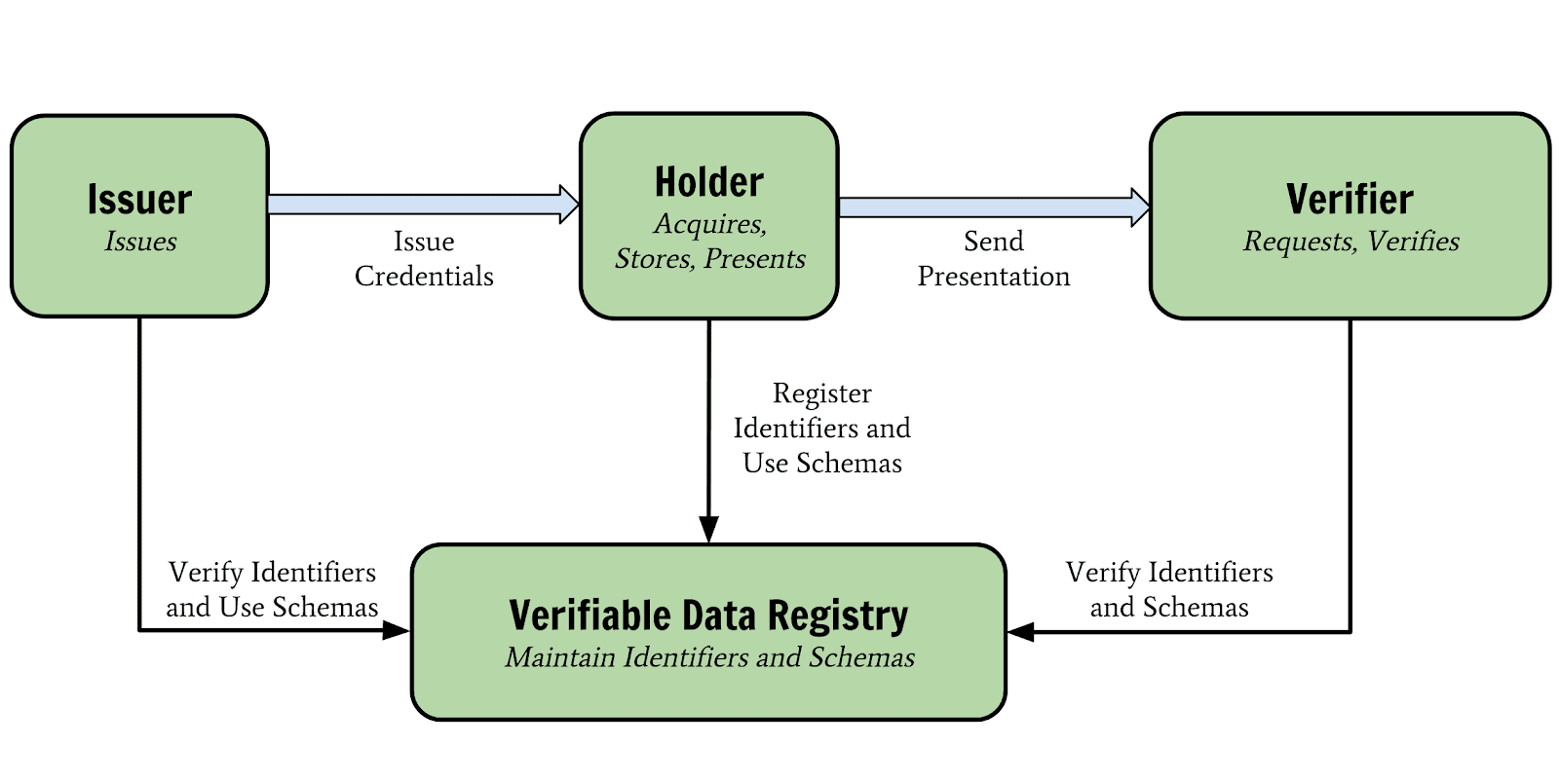

The Verifiable Credential (VC) Model

Enter the VC model, the bread and butter behind decentralized identity, which brings about the possibility of building applications with a solid digital trust foundation.

The verifiable credentials model is the digital version of the paper credentials model. That is:

- An authority decides you are eligible to receive a credential and issues you one.

- You hold your credential in your (digital) wallet.

- At some point, you are asked to prove the claims from the credential.

- You provide a verifiable presentation to the verifier, proving the same things as with a paper credential.

Plus,

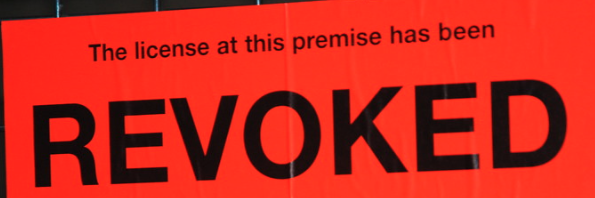

- You can prove one more thing—that the issued credential has not been revoked.

As we’ll see, verifiable credentials and presentations are not simple documents that anyone can create and use. They are cryptographically constructed so that a presentation proves the four key attributes of all credentials:

- Who issued the credential.

- The credential was issued to the entity presenting it.

- The claims were not tampered with.

- The credential has not been revoked.

Unlike a paper credential, those four attributes are evaluated not based on the judgment and expertise of the person looking at the credential, but rather online using cryptographic algorithms that are extremely difficult to forge. When a verifier receives a presentation from a holder, they use information from a blockchain (shown as the verifiable data registry in the image below) to perform the cryptographic calculations necessary to prove the four attributes. Forgeries become much (MUCH!) harder with verifiable credentials!

The W3C Verifiable Credentials Model

Licensed under CC BY 4.0
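To make the four checks concrete, the Python sketch below plays the verifier's role under heavy simplifications. These are assumptions, not how Indy works (Indy uses CL signatures, link secrets and revocation registries): a dict `ledger` stands in for the verifiable data registry, holding public keys by DID and a set of revoked credential IDs; signatures are textbook Schnorr signatures modulo the Mersenne prime 2**127 - 1, far too weak for production; and the holder shows the credential was issued to them by signing the verifier's fresh nonce with the key registered for the credential's subject DID. All DIDs and field names are hypothetical.

```python
import hashlib
import json
import secrets

# Toy group: arithmetic modulo the Mersenne prime 2**127 - 1 with base G = 3.
# Far too weak for real use; chosen so the sketch runs on the standard library.
P = 2**127 - 1
G = 3
ORDER = P - 1  # by Fermat's little theorem, exponents can be reduced mod P - 1


def keygen():
    """Return (private signing key, public verification key)."""
    x = secrets.randbelow(ORDER - 1) + 1
    return x, pow(G, x, P)


def _challenge(commitment: int, message: bytes) -> int:
    data = commitment.to_bytes(16, "big") + message
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % ORDER


def sign(x: int, message: bytes):
    """Schnorr signature: commitment r = G^k, response s = k + c*x."""
    k = secrets.randbelow(ORDER - 1) + 1
    r = pow(G, k, P)
    return r, (k + _challenge(r, message) * x) % ORDER


def verify(y: int, message: bytes, signature) -> bool:
    r, s = signature
    return pow(G, s, P) == r * pow(y, _challenge(r, message), P) % P


def signed_body(credential: dict) -> bytes:
    """Canonical bytes of the issuer-signed fields (everything but the signature)."""
    fields = {k: credential[k] for k in ("id", "issuer", "subject", "claims")}
    return json.dumps(fields, sort_keys=True).encode()


def verify_presentation(ledger: dict, credential: dict, nonce: bytes, holder_sig) -> bool:
    issuer_key = ledger["keys"][credential["issuer"]]
    subject_key = ledger["keys"][credential["subject"]]
    return (
        # Who issued it, and the claims were not tampered with:
        verify(issuer_key, signed_body(credential), credential["signature"])
        # It was issued to the presenter: they control the subject's key.
        and verify(subject_key, nonce, holder_sig)
        # It has not been revoked:
        and credential["id"] not in ledger["revoked"]
    )


# Usage: Faber issues to Alice; Alice answers a verifier's fresh nonce.
faber_x, faber_y = keygen()
alice_x, alice_y = keygen()
ledger = {"keys": {"did:example:faber": faber_y, "did:example:alice": alice_y},
          "revoked": set()}
cred = {"id": "cred-1", "issuer": "did:example:faber",
        "subject": "did:example:alice", "claims": {"degree": "BSc"}}
cred["signature"] = sign(faber_x, signed_body(cred))
nonce = secrets.token_bytes(16)
print(verify_presentation(ledger, cred, nonce, sign(alice_x, nonce)))  # True
```

Changing any claim, presenting with someone else's key, or adding the credential ID to the revoked set makes the check fail, which mirrors the four attributes listed above.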

The prerequisite course, LFS172x – Introduction to Hyperledger Sovereign Identity Blockchain Solutions: Indy, Aries and Ursa, more than covers the reasons why we need a better identity model on the Internet, so we won’t go into them in depth here. Suffice it to say, blockchain has enabled a better way to build solutions and will enable a more trusted Internet.

Key Concepts

Let’s review other key concepts that you’ll need for this course, such as:

- self-sovereign identity

- trust over IP

- decentralized identifiers

- zero-knowledge proof

- selective disclosure

- wallet

- agent

If you are not familiar or comfortable with these concepts and terminology, we suggest you refresh yourself with this course: LFS172x – Introduction to Hyperledger Sovereign Identity Blockchain Solutions: Indy, Aries and Ursa.

Self-Sovereign Identity (SSI)

Self-sovereign identity is one of the most important concepts discussed in the prerequisite course and it is what you should keep in mind at all times as you dig deep into the Aries world of development. SSI is the idea that you control your own data and you control when and how it is provided to others; when it is shared, it is shared in a trusted way. With SSI, there is no central authority holding your data that passes it on to others upon request. And because of the underlying cryptography and blockchain technology, SSI means that you can present claims about your identity and others can verify them with cryptographic certainty.

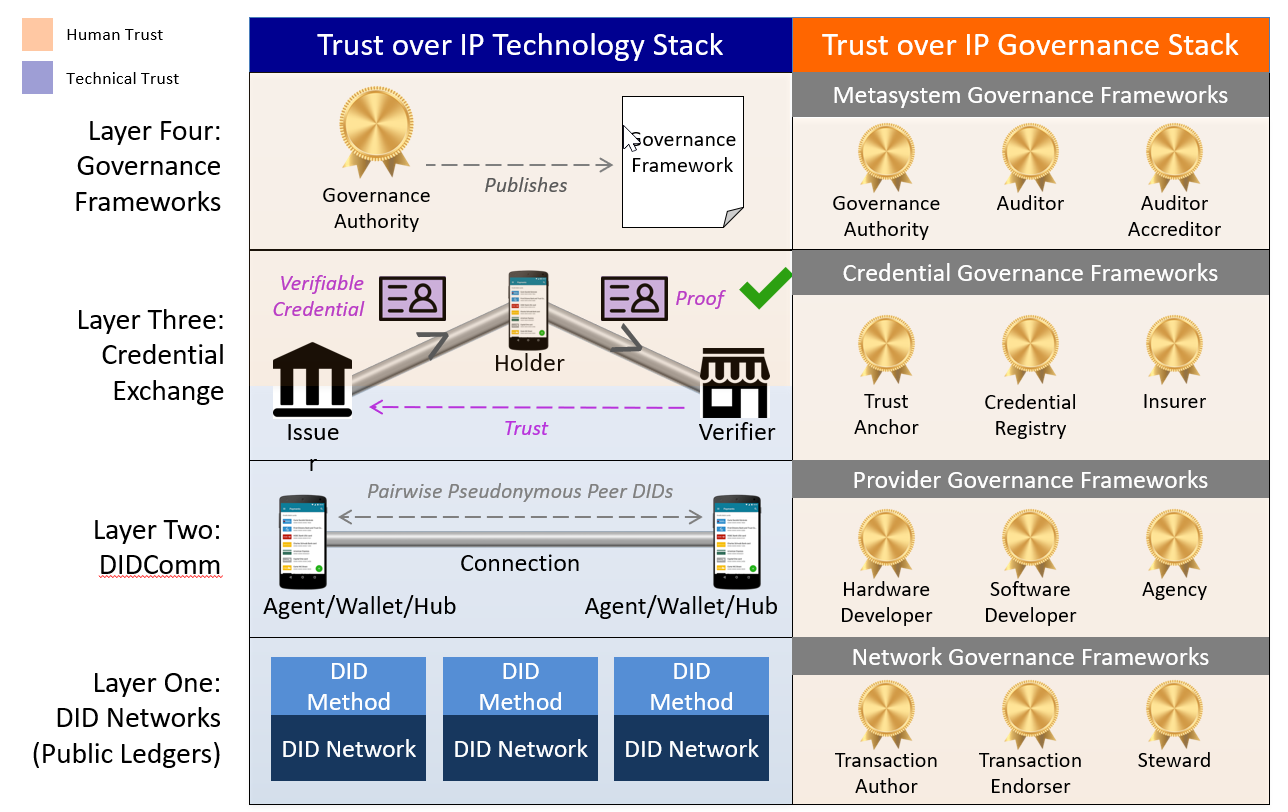

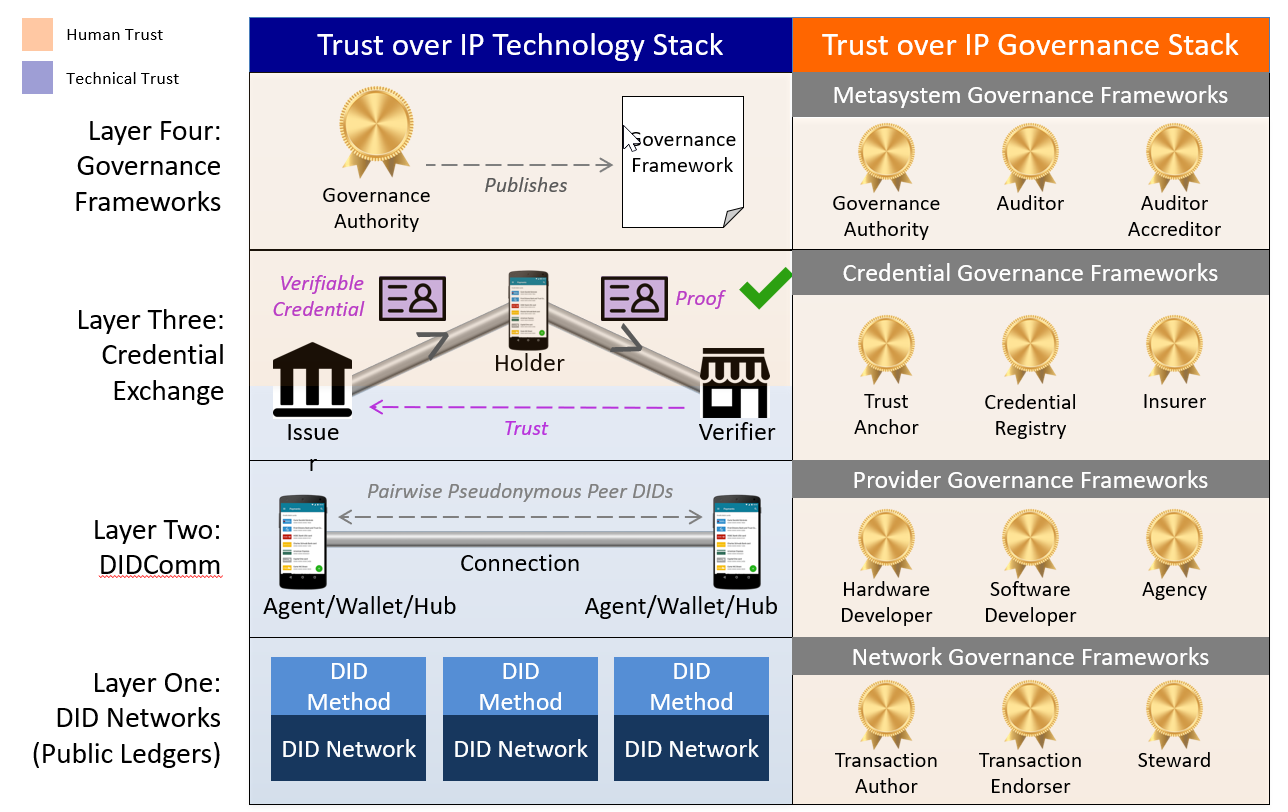

Trust Over IP (ToIP)

Along with SSI, another term you will hear is trust over IP (ToIP). ToIP is a set of protocols being developed to enable a layer of trust on the Internet, protocols embodied in Indy, Aries and Ursa. It includes self-sovereign identity in that it covers identity, but goes beyond that to cover any type of authentic data. Authentic data in this context is data that is not necessarily a credential (attributes about an entity) but is managed as a credential and offers the same guarantees when proven. ToIP is defined by the “Trust over IP Technology Stack,” as represented in this image from Drummond Reed:

Trust Over IP (ToIP) Technology Stack

Licensed under CC BY 4.0

The core of Aries implements Layer Two (DIDComm) of the ToIP stack, enabling both Layer One (DIDs) and Layer Three (Credential Exchange) capabilities. Hyperledger Indy provides both a DID method and a verifiable credential exchange model. Aries is intended to work with Indy and other Layer One and Layer Three implementations. Aries implements DIDComm, a secure, transport-agnostic mechanism for sending messages based on DIDs.
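For a feel of what actually travels over DIDComm, here is a Python sketch that builds the plaintext of an Aries basic message (the message type defined in Aries RFC 0095) as JSON and reads its `@type` back for dispatching. Before sending, an agent framework would encrypt ("pack") this JSON for the recipient's keys; that step is omitted. The `https://didcomm.org/` type prefix is the current convention; older agents use the `did:sov:BzCbsNYhMrjHiqZDTUASHg;spec/` prefix for the same message family.

```python
import json
import uuid
from datetime import datetime, timezone

# Message type URI for Aries RFC 0095 basic messages.
BASIC_MESSAGE = "https://didcomm.org/basicmessage/1.0/message"


def basic_message(content: str) -> dict:
    """Build the plaintext of a basic message, before the framework encrypts it."""
    return {
        "@type": BASIC_MESSAGE,
        "@id": str(uuid.uuid4()),  # unique ID, so replies can refer to this message
        "sent_time": datetime.now(timezone.utc).isoformat(),
        "content": content,
    }


def message_family(type_uri: str) -> tuple:
    """Split a type URI into (protocol, version, message name) for dispatching."""
    _prefix, protocol, version, name = type_uri.rsplit("/", 3)
    return protocol, version, name


wire = json.dumps(basic_message("Hello Faber!"))
received = json.loads(wire)
print(message_family(received["@type"]))  # ('basicmessage', '1.0', 'message')
```

Because the message is just JSON with a typed header, the same content can move over HTTP, WebSockets or any other transport, which is what "transport-agnostic" means here.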

Decentralized Identifiers (DIDs)

A decentralized identifier (DID) is like a universally unique identifier (UUID) for your identity. The identifier portion of an Indy (Sovrin-style) DID is a 128-bit number written in Base58:

did:sov:AKRMugEbG3ez24K2xnqqrm

Some things you should know about DIDs:

- In Indy, a DID is controlled by one or more Ed25519 public/private key pairs. The public key is called a “verkey” (verification key); the private key is called a “signing key.”

- DIDs can be created on many different blockchains; right now, Indy only supports Sovrin-style DIDs.

The DID specification can be found in the W3C Working Draft, Decentralized Identifiers (DIDs) v1.0.
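As an illustration of how an Indy-style DID relates to its verkey, this Python sketch follows what we understand to be the indy-sdk default when no DID is supplied: take the first 16 bytes (128 bits) of the 32-byte Ed25519 verkey and Base58-encode them with the Bitcoin alphabet. To stay within the standard library, 32 random bytes stand in for a real verkey; generating an actual Ed25519 key pair needs a cryptography library.

```python
import os

# Bitcoin-style Base58 alphabet (no 0, O, I or l), as used by Indy.
ALPHABET = "123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz"


def b58encode(data: bytes) -> str:
    n = int.from_bytes(data, "big")
    digits = ""
    while n > 0:
        n, rem = divmod(n, 58)
        digits = ALPHABET[rem] + digits
    # Each leading zero byte is written as a leading "1".
    zeros = len(data) - len(data.lstrip(b"\x00"))
    return "1" * zeros + digits


def b58decode(text: str) -> bytes:
    n = 0
    for ch in text:
        n = n * 58 + ALPHABET.index(ch)
    zeros = len(text) - len(text.lstrip("1"))
    return b"\x00" * zeros + n.to_bytes((n.bit_length() + 7) // 8, "big")


def did_from_verkey(verkey: bytes, method: str = "sov") -> str:
    """Indy-style DID: Base58 of the first 16 bytes (128 bits) of the verkey."""
    if len(verkey) != 32:
        raise ValueError("an Ed25519 verkey is 32 bytes")
    return f"did:{method}:{b58encode(verkey[:16])}"


verkey = os.urandom(32)  # stand-in for a real Ed25519 public key
print(did_from_verkey(verkey))  # a DID of the form did:sov:<Base58 string>
```

Decoding the example DID above, `AKRMugEbG3ez24K2xnqqrm`, with `b58decode` yields exactly 16 bytes, consistent with the 128-bit description.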

Zero-Knowledge Proof

A zero-knowledge proof (ZKP) is about proving attributes about an entity (a person, organization or thing) without exposing a correlatable identifier about that entity. Formally, it’s about presenting claims from verifiable credentials without exposing the key (and hence a unique identifier) of the proving party to the verifier. A ZKP still exposes the data asked for (which could uniquely identify the prover), but does so in a way that shows the prover possesses the issued verifiable credential while preventing multiple verifiers from correlating the prover’s identity. Indy, based on underlying Ursa cryptography, implements ZKP support.

Selective Disclosure

The Indy ZKP model enables some additional capabilities beyond most non-ZKP implementations. Specifically, claims from verifiable credentials can be selectively disclosed, meaning that just some data elements from one or more credentials can (and should) be provided in a single presentation. By providing them in a single presentation, the verifier knows that all of the credentials were issued to the same entity. In addition, a piece of cryptographic magic that is part of ZKP (which we won’t detail here, but it’s fascinating) allows proving pieces of information without presenting the underlying data. For example, a person can prove they are older than a certain age based on their date of birth, without providing their date of birth to the verifier. Very cool!

An Example of Selective Disclosure

Person is verified as “old enough” to enter the bar but no other data is revealed

By Peter Stokyo

Licensed under CC BY 4.0
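In Indy-based agents such as ACA-Py, a verifier expresses selective disclosure and predicates in an Indy proof request: `requested_attributes` lists the claims to reveal, and `requested_predicates` lists conditions to prove without revealing the value. The Python sketch below builds such a request for the bar example. The attribute name `birthdate_dateint`, the referent keys and the age of 21 are assumptions modeled on the ACA-Py demo, where dates are encoded as YYYYMMDD integers because Indy predicates compare integers. The `restrictions` that would normally pin the credential to a trusted issuer, and the call that sends the request, are omitted.

```python
import json
from datetime import date


def cutoff_dateint(today: date, min_age: int) -> int:
    """Latest birth date, as a YYYYMMDD integer, of someone at least min_age today."""
    try:
        cutoff = today.replace(year=today.year - min_age)
    except ValueError:  # today is Feb 29 and the target year is not a leap year
        cutoff = today.replace(year=today.year - min_age, day=28)
    return int(cutoff.strftime("%Y%m%d"))


def build_proof_request(today: date, min_age: int = 21, reveal=()) -> dict:
    """Indy-style proof request: reveal only `reveal`, prove age via a predicate."""
    return {
        "name": "Proof of age",
        "version": "1.0",
        # Selectively disclosed attributes: the verifier sees these values.
        "requested_attributes": {
            f"{i}_{attr}_uuid": {"name": attr} for i, attr in enumerate(reveal)
        },
        # Predicates: the verifier learns only that the condition holds.
        "requested_predicates": {
            "0_age_check_uuid": {
                "name": "birthdate_dateint",
                "p_type": "<=",
                "p_value": cutoff_dateint(today, min_age),
            }
        },
    }


# The bar example: nothing revealed, only "born on or before the cutoff" proven.
print(json.dumps(build_proof_request(date(2024, 5, 17)), indent=2))
```

Passing, say, `reveal=["name"]` adds a disclosed attribute alongside the predicate; everything else in the holder's credentials stays private.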

Wallet

DIDs and their keys are stored in an identity wallet. Identity wallets are like cryptocurrency wallets, but store different kinds of data—DIDs, credentials and metadata to use them. You can find more info on GitHub. The indy-sdk includes a default implementation of a wallet that works out of the box. A wallet is the software that processes verifiable credentials and DIDs.

Agent

Indy, Aries and Ursa use the term agent to mean the software that interacts with other entities (via DIDs and more). For example, a person might have a mobile agent app on their smart device, while an organization might have an enterprise agent running on an enterprise server, perhaps in the cloud. All agents (with rare exceptions) have secure storage for identity-related data including DIDs, keys and verifiable credentials. As well, all agents are controlled to some degree by their owner, sometimes with direct control (and correspondingly high trust) and sometimes with minimal control (and far less trust).

Summary

This chapter has largely been a review of the concepts introduced in the previous course. Its purpose is to provide context for why you want to become an Aries developer and to recap some of the terminology and concepts behind decentralized identity solutions that were discussed in the prerequisite course: LFS172x – Introduction to Hyperledger Sovereign Identity Blockchain Solutions: Indy, Aries and Ursa.

Chapter 2: Exploring Aries and Aries Agents

Chapter Overview

As you learned in the prerequisite course (LFS172x – Introduction to Hyperledger Sovereign Identity Blockchain Solutions: Indy, Aries and Ursa)—which you took, right?—Hyperledger Aries is a toolkit for building solutions focused on creating, transmitting, storing and using verifiable credentials. Aries agents are software components that act on behalf of (“as agents of”) entities—people, organizations and things. They enable decentralized, self-sovereign identity based on a secure, peer-to-peer communications channel. In fact, the main reason for using an Aries agent is to exchange verifiable credentials!

In this chapter, we’ll look at the architecture of an Aries agent: specifically, what parts of an agent come from Aries, and what parts you are going to have to build. We will also look at the interfaces that exist to allow Aries agents to talk to one another and to public ledgers such as instances of Hyperledger Indy.

Learning Objectives

By the end of this chapter you should:

- Be familiar with the Aries ecosystem consisting of edge agents for people and organizations, cloud agents for enterprises and device agents for IoT devices.

- Know the concepts behind issuing and verifying agents.

- Understand the internal components of an Aries agent.

Examples of Aries Agents

Let’s first look at a couple of examples to remind us what Aries agents can do. We’ll also use this as a chance to introduce some characters that the Aries community has adopted for many Aries proof-of-concept implementations. You first met these characters in the LFS172x course.

- Alice is a person who has a mobile Aries agent on her smartphone. She uses it to receive credentials from various entities, and uses those credentials to prove things about herself online.

- Alice’s smartphone app connects with a cloud-based Aries agent that routes messages to her. It too is Alice’s agent, but it’s one that is (most likely) run by a vendor. We’ll learn more about these cloud services when we get to the Aries mobile agents chapter.

- Alice is a graduate of Faber College (of Animal House fame), where the school slogan is “Knowledge is Good.” (We think the slogan should really be “Zero Knowledge is Good.”) Faber College has an Aries agent that issues verifiable credentials to the college’s students.

- Faber also has agents that verify presentations of claims from students and staff at the college to enable access to resources. For example, Alice proves the claims from her “I graduated from Faber College” credential to get a discount at the Faber College Bookstore whenever she is on campus—or when she shops there online.

- Faber also has an Aries agent that receives, holds and proves claims from credentials about the college itself. For example, Faber’s agent might hold a credential that it is authorized to grant degrees to its graduates from the jurisdiction (perhaps the US state) in which it is registered.

- ACME is a company for whom Alice wants to work. As part of their application process, ACME’s Aries agent requests proof of Alice’s educational qualifications. Alice’s Aries agent can provide proof using the credential issued by Faber to Alice.

- Since Alice is the first Faber College student to ever apply to ACME, ACME doesn’t know if they can trust Faber. An ACME agent might connect to Faber’s agent (using the DID in the verifiable credential that Alice presented) to get proof that Faber is a credentialed academic institution.

Lab: Issuing, Holding, Proving and Verifying

In this first lab, we’ll walk through the interactions of three Aries agents:

- A mobile agent to hold a credential and prove claims from that credential.

- An issuing agent.

- A verifying agent.

The instructions for running the lab can be found on GitHub.

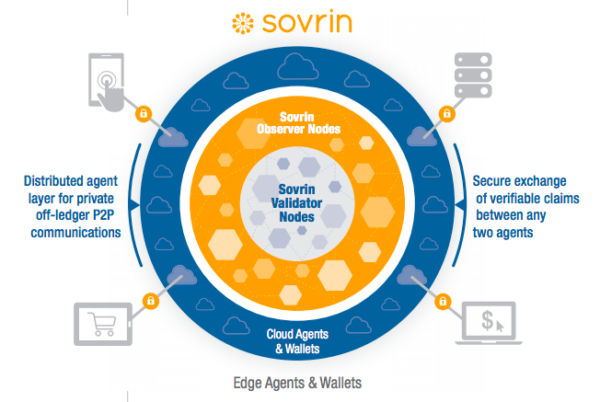

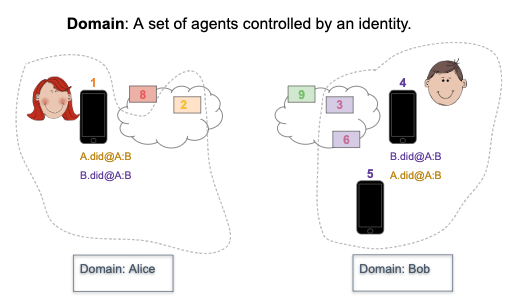

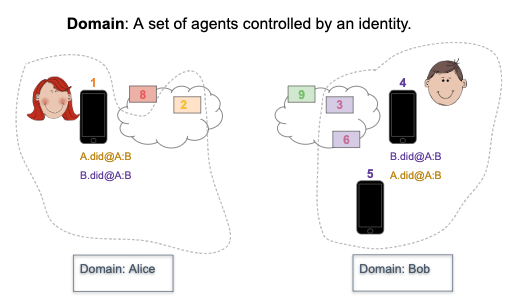

An Aries Ecosystem

All of the examples of Aries agents in the previous section can be built independently by different organizations because of the common protocols that Aries agents use to communicate. This classic Indy ecosystem picture shows multiple agents: the four around the outside (on a phone, a tablet, a laptop and an enterprise server) are referred to as edge agents, and the ones in the blue circle are called cloud agents. In the centre is the blockchain, the public ledger on which the globally resolvable data that supports verifiable credentials resides.

The Aries Ecosystem

Licensed under CC BY 4.0

The agents in the picture share many attributes:

- They all have storage for keys.

- They all have some secure storage for other data related to their role as an agent.

- Each interacts with other agents using secure, peer-to-peer messaging protocols.

- They all have an associated mechanism to provide “business rules” to control the behavior of the agent:

– Often a person (via a user interface) for agents on phones, tablets, laptops, etc.

– Often a backend enterprise system for enterprise agents.

– For cloud agents the “rules” are often limited to the routing of messages to and from other agents.

While there can be many other agent setups, the picture above shows the most common ones:

- Edge agents for people.

- Edge agents for organizations.

- Cloud agents for routing messages between agents (although cloud agents could also be edge agents).

A significant emerging use case missing from that picture is agents embedded within or associated with IoT devices. In the common IoT case, IoT device agents are just variants of other edge agents, connected to the rest of the ecosystem through a cloud agent. All the same principles apply.

A bit misleading in the picture is the implication that edge agents connect to the public ledger through cloud agents. In fact, (almost) all agents connect directly to the ledger network. In this picture it’s the Sovrin ledger, but that could be any Indy network (e.g., a set of nodes running indy-node software) and, in future Aries agents, ledgers from other providers. Since most agents connect directly to ledgers, most embed ledger SDKs (e.g., indy-sdk) and make calls to the ledger SDK to interact with the ledger and other SDK-controlled resources (e.g., secure storage). Very small IoT devices are an exception. Lacking compute/storage resources and/or connectivity, such devices might securely communicate with a cloud agent that in turn communicates with the ledger.

The (most common) purpose of cloud agents is to enable secure and privacy preserving routing of messages between edge agents. Rather than messages going directly from edge agent to edge agent, messages sent from edge agent to edge agent are routed through a sequence of cloud agents. Some of those cloud agents might be controlled by the sender, some by the receiver and others might be gateways owned by agent vendors (called “agencies”). In all cases, an edge agent tells routing agents “here’s how to send messages to me,” so a routing agent sending a message only has to know how to send a peer-to-peer message—a single hop in the message’s journey. While quite complicated, the protocols used by the agents largely take care of this complexity, and most developers don’t have to know much about it. We’ll cover more about routing in the mobile agents chapter of this course.
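The single-hop idea can be sketched in Python: the sender wraps the message once per routing agent, innermost layer first, and each routing agent peels off one layer and learns only the next destination. The envelope shape is modeled on the Aries routing `forward` message (`@type`, `to`, `msg`); in real DIDComm every layer is also encrypted for the agent that will open it, which this sketch leaves out. The agent names are hypothetical.

```python
from typing import Dict, List, Tuple

# Message type of the Aries routing "forward" message.
FORWARD = "https://didcomm.org/routing/1.0/forward"


def wrap_for_route(message: Dict, recipient: str, route: List[str]) -> Tuple[str, Dict]:
    """Wrap `message` so it reaches `recipient` via the routing agents in `route`.

    `route` lists routing agents in travel order. Returns (first hop, envelope).
    Each layer names only the next hop (unencrypted here, unlike real DIDComm).
    """
    envelope, next_hop = message, recipient
    for mediator in reversed(route):
        envelope = {"@type": FORWARD, "to": next_hop, "msg": envelope}
        next_hop = mediator
    return next_hop, envelope


def unwrap(envelope: Dict) -> Tuple[str, Dict]:
    """What a routing agent does: peel one layer and learn the next hop."""
    if envelope.get("@type") != FORWARD:
        raise ValueError("not a forward message; it is for this agent")
    return envelope["to"], envelope["msg"]


# Usage: a message to Alice's phone via a vendor agency and Alice's cloud agent.
hop, envelope = wrap_for_route({"content": "Hi Alice"}, "alice-phone",
                               ["vendor-agency", "alice-cloud-agent"])
while envelope.get("@type") == FORWARD:
    next_hop, envelope = unwrap(envelope)
    print(f"{hop} forwards to {next_hop}")
    hop = next_hop
print(f"{hop} receives {envelope}")
# vendor-agency forwards to alice-cloud-agent
# alice-cloud-agent forwards to alice-phone
# alice-phone receives {'content': 'Hi Alice'}
```

Note that the vendor agency only ever sees "send this to alice-cloud-agent"; it never learns the final destination or, with encryption in place, the content.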

Note: You may have noticed many caveats in this section: “most common,” “commonly” and so on. That is because there are many small building blocks available in Aries and underlying components that can be combined in infinite ways. We recommend not worrying about the alternate use cases for now. Focus on understanding the common use cases (listed earlier) while remembering that other configurations are possible.

Note: While most Aries agents currently available only support Indy-based ledgers, the stated intention of the Aries project is to add support for other ledgers. In this course, we’ll focus mostly on the Aries code that is being used today, which is mostly based on Indy, but we’ll highlight where and how other ledgers can be and are being integrated.

The Logical Components of an Aries Agent

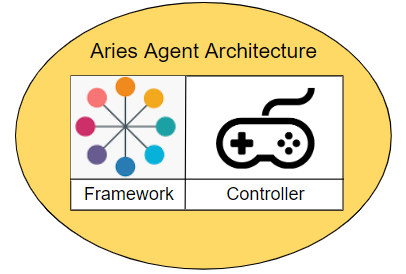

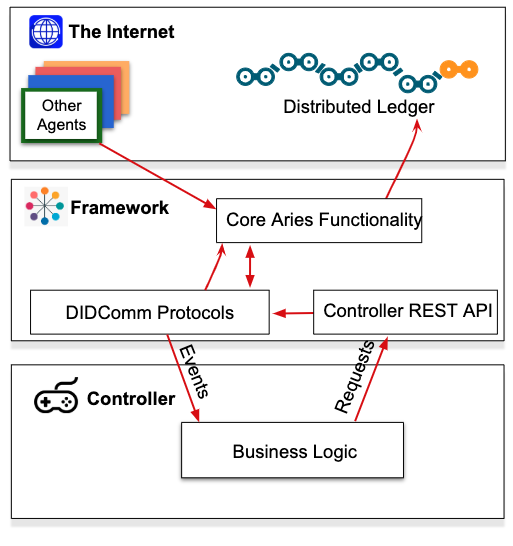

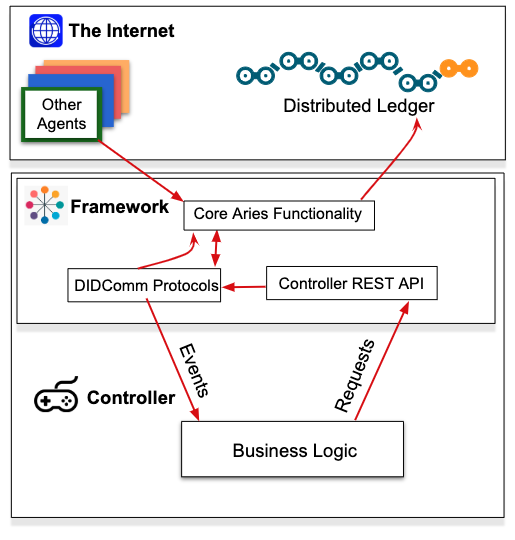

All Aries agent deployments have two logical components: a framework and a controller.

The Logical Components of an Aries Agent

Licensed under CC BY 4.0

The framework contains the standard capabilities that enable an Aries agent to interact with its surroundings: ledgers, storage and other agents. A framework is an artifact of an Aries project that you don’t have to create or maintain; you just embed it in your solution. The framework knows how to initiate connections, respond to requests, send messages and more. However, a framework needs to be told when to initiate a connection, and it doesn’t know what response should be sent to a given request. It just sits there until it’s told what to do.

The controller is the component that, well, controls an instance of an Aries framework’s behavior: the business rules for that particular instance of an agent. The controller is the part of a deployment that you build to create an Aries agent that handles your use case, both responding to requests from other agents and initiating requests. For example:

- In a mobile app, the controller is the user interface and how the person interacts with the user interface. As events come in, the user interface shows the person their options, and after input from the user, tells the framework how to respond to the event.

- An issuer, such as Faber College’s agent, has a controller that integrates agent capabilities (requesting proofs, verifying them and issuing credentials) with enterprise systems, such as a Student Information System that tracks students and their grades. When Faber’s agent is interacting with Alice’s, and Alice’s agent requests an “I am a Faber Graduate” credential, it’s the controller that figures out whether Alice has earned such a credential and, if so, what claims should be put into the credential. The controller also directs the agent to issue the credential.
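Faber's controller logic might boil down to a business rule like the Python sketch below: consult the Student Information System and either decline (return `None`) or return the claims to put in the credential, which the controller would then hand to the framework. The SIS record layout, the attribute names and the graduation rule are all hypothetical, and no Aries API is called.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StudentRecord:  # hypothetical Student Information System record
    student_id: str
    name: str
    credits_earned: int
    credits_required: int
    degree: str
    graduation_date: Optional[str] = None


def degree_claims(record: Optional[StudentRecord]) -> Optional[dict]:
    """Controller business rule: claims to issue, or None to decline."""
    if record is None or record.graduation_date is None:
        return None  # unknown student, or not graduated yet
    if record.credits_earned < record.credits_required:
        return None
    return {
        "name": record.name,
        "degree": record.degree,
        "date": record.graduation_date,
    }


SIS = {"A123": StudentRecord("A123", "Alice Smith", 120, 120, "Maths", "2020-05-01")}
print(degree_claims(SIS.get("A123")))
print(degree_claims(SIS.get("Z999")))  # None: no such student
```

Keeping the rule as a plain function, separate from any agent calls, makes it easy to test without running an agent.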

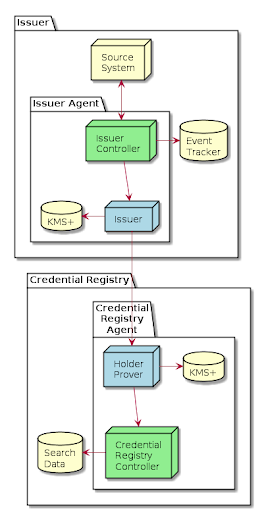

Aries Agent Architecture (ACA-Py)

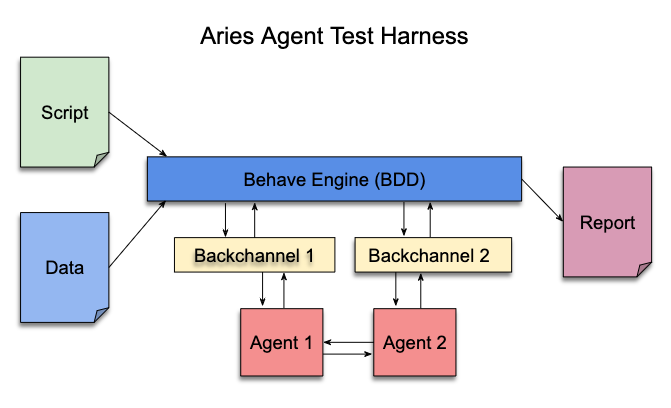

The diagram below is an example of an Aries agent architecture, as exemplified by Aries Cloud Agent – Python (ACA-Py):

Aries Agent Architecture (ACA-Py)

Licensed under CC BY 4.0

The framework provides all of the core Aries functionality such as interacting with other agents and the ledger, managing secure storage, sending event notifications to, and receiving instructions from the controller. The controller executes the business logic that defines how that particular agent instance behaves—how it responds to the events it receives, and when to initiate events. The controller might be a web or native user interface for a person or it might be coded business rules driven by an enterprise system.

Between the two is a pair of notification interfaces.

- When the framework receives a message (an event) from the outside world, it sends a notification about the event to the controller so the controller can decide what to do.

- In turn, the controller sends a notification to the framework to tell the framework how to respond to the event.

– The same controller-to-framework notification interface is used when the controller wants the framework to initiate an action, such as sending a message to another agent.

What that means for an Aries developer is that the framework you use is a complete dependency that you include in your application. You don’t have to build it yourself. It is the controller that gives your agent its unique personality. Thus, the vast majority of Aries developers focus on building controllers. Of course, since Aries frameworks are both evolving and open source, if your agent implementation needs a feature that is not in the framework you are using, you can do some Aries framework development and contribute it to Hyperledger.
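The two notification interfaces can be modeled in a few lines of Python. In this toy, `ToyFramework` stands in for an Aries framework: it reports incoming events to a controller callback and carries out whatever instruction the callback returns, and the controller can also call it directly to initiate an action. All class, event and instruction names are hypothetical and do not correspond to any real framework's API.

```python
from typing import Callable, Dict, List, Optional

Event = Dict        # framework -> controller notification
Instruction = Dict  # controller -> framework notification


class ToyFramework:
    """Hypothetical stand-in for an Aries framework: it has no business rules."""

    def __init__(self, controller: Callable[[Event], Optional[Instruction]]):
        self.controller = controller
        self.performed: List[Instruction] = []  # actions carried out so far

    def on_incoming(self, event: Event) -> None:
        # Framework -> controller: report the event and wait to be told what to do.
        instruction = self.controller(event)
        if instruction is not None:
            self.perform(instruction)

    def perform(self, instruction: Instruction) -> None:
        # Controller -> framework: the same entry point initiates new actions.
        # A real framework would talk to other agents or the ledger here.
        self.performed.append(instruction)


def faber_controller(event: Event) -> Optional[Instruction]:
    """Business rules: accept connection requests; ignore everything else."""
    if event["type"] == "connection_request":
        return {"action": "accept_connection", "connection_id": event["connection_id"]}
    return None


agent = ToyFramework(faber_controller)
agent.on_incoming({"type": "connection_request", "connection_id": "c1"})
agent.on_incoming({"type": "trust_ping", "connection_id": "c1"})  # ignored by the rules
agent.perform({"action": "send_message", "connection_id": "c1", "content": "Welcome"})
print(agent.performed)
```

Swapping in a different `faber_controller` changes the agent's behavior without touching `ToyFramework`, which is the division of labor described above.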

Agent Terminology Confusion

In many places in the Aries community, the “agent framework” term we are using here is shortened to “agent.” That creates some confusion as you now can say “an Aries agent consists of an agent and a controller.” Uggghh… Throughout the course we have tried to make it very clear when we are talking about the whole agent versus just the agent framework. Often we will use the name of a framework to make it clear the context of the term. However, as a developer, you should be aware that in the community, the term “agent” is sometimes used just for the agent framework component and sometimes for the combined framework+controller.

Naming is hard… 🤦

Current Agent Frameworks

There are several Aries general purpose agent frameworks that are ready to go out of the box.

- aries-cloudagent-python (ACA-Py) is suitable for all non-mobile agent applications and has production deployments. ACA-Py and a controller run together, communicating across an HTTP interface. Your controller can be written in any language, and ACA-Py embeds the indy-sdk. At the time of writing this course, ACA-Py does not support any ledgers or verifiable credential exchange models other than Hyperledger Indy.

- aries-framework-dotnet can be used for building mobile (via Xamarin) and server-side agents and has production deployments. The controller for an aries-framework-dotnet app can be written in any language that supports embedding the framework as a library in the controller. The framework embeds the indy-sdk.

- aries-staticagent-python is a configurable agent that does not use persistent storage. To use it, keys are pre-configured and loaded into the agent at initialization time.

There are several other frameworks that are currently under active development, including the following.

- aries-framework-go is a pure golang framework that provides a similar architecture to ACA-Py, exposing an HTTP interface for its companion controller. The framework does not currently embed the Indy SDK and work on supporting a golang-based verifiable credentials implementation is in progress.

- aries-sdk-ruby is a Ruby-on-Rails agent framework with a comparable architecture to ACA-Py.

- aries-framework-javascript is a pure JavaScript framework that is intended to be the basis of React Native mobile agent implementations.

Different Aries frameworks implement the interfaces between the framework and controller differently. For example, in aries-cloudagent-python, the agent framework and the controller use HTTP interfaces to interact. In aries-framework-dotnet, the framework is a library, and the interfaces are within the process as calls and callbacks to/from the library.
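To make the pair of notification interfaces concrete, here is a minimal sketch of the in-process, callback style described for aries-framework-dotnet, written in Python for consistency with the rest of the course. `ToyFramework`, `on_event`, `instruct` and `AckController` are hypothetical names for illustration only, not part of any Aries framework’s API; a real framework would also handle DIDComm, storage and the ledger.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ToyFramework:
    # Framework -> controller interface: a callback the controller registers.
    on_event: Callable[[dict], None] = lambda event: None
    # Messages the framework has "sent" to other agents (kept for inspection).
    outbox: List[dict] = field(default_factory=list)

    def receive_message(self, message: dict) -> None:
        """Called when a message arrives from the outside world."""
        self.on_event({"type": "message_received", "message": message})

    def instruct(self, instruction: dict) -> None:
        """Controller -> framework interface: respond to an event or start an action."""
        if instruction["action"] != "send_message":
            raise ValueError(f"unsupported action: {instruction['action']}")
        self.outbox.append({"to": instruction["to"], "content": instruction["content"]})


class AckController:
    """The business logic: acknowledge every inbound message."""

    def __init__(self, framework: ToyFramework) -> None:
        self.framework = framework
        framework.on_event = self.handle_event

    def handle_event(self, event: dict) -> None:
        if event["type"] == "message_received":
            self.framework.instruct({"action": "send_message",
                                     "to": event["message"]["from"],
                                     "content": "ack"})


framework = ToyFramework()
AckController(framework)
framework.receive_message({"from": "bob", "content": "hello"})
print(framework.outbox)  # [{'to': 'bob', 'content': 'ack'}]
```

In ACA-Py the same two directions exist, but they travel over HTTP (webhooks to the controller, admin API calls to the framework) instead of in-process callbacks.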

Aries Agent Internals and Protocols

In this section, we’ll cover, at a high level, the internals of Aries agents and how Aries agent messaging protocols are handled.

The most basic function of an Aries agent is to enable (on behalf of its controlling entity) secure messaging with other agents. Here’s an overview of how that happens:

- Alice and Bob have running agents.

- Somehow (we’ll get to that) Alice’s agent discovers Bob’s agent and sends it an invitation (yes, we’ll get to that too!) to connect.

– The invitation is in plaintext (perhaps presented as a QR code) and includes information on how a message can be securely sent back to Alice.

- Bob’s agent (after conferring with Bob: “Do you want to do this?”) creates a private DID for the relationship and embeds it in a message to Alice’s agent with a request to connect.

– This message uses information in the invitation to securely send the message back to Alice’s agent.

- Alice’s agent associates the message from Bob’s agent with the invitation that was sent to Bob and confers with Alice about what to do.

- Assuming Alice agrees, her agent stores Bob’s connection information, creates a private DID for the relationship, and sends a response message to Bob’s agent.

– Whoohoo! The agents are connected.

- Using her agent, Alice can now send a message to Bob (via their agents, of course) and vice versa. The messages use the newly established communication channel and so are both private and secure.
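The flow above can be sketched as a toy exchange in which each side creates a fresh private identifier for every relationship. This is a hypothetical model: `ToyAgent` and `new_private_did` are illustrative names, the `did:example:` values are random placeholders rather than DIDs derived from keys, and nothing is encrypted.

```python
import secrets
from dataclasses import dataclass, field
from typing import Dict, Optional


def new_private_did() -> str:
    # Placeholder only: a real agent derives its DID from a newly generated key pair.
    return "did:example:" + secrets.token_hex(8)


@dataclass
class ToyAgent:
    name: str
    # peer name -> {"my_did": DID I use with that peer, "their_did": peer's DID}
    connections: Dict[str, Dict[str, Optional[str]]] = field(default_factory=dict)

    def create_invitation(self) -> dict:
        # Plaintext; "reply_to" stands in for the endpoint and key information
        # that tells the invitee how to send a message back securely.
        return {"type": "invitation", "from": self.name,
                "reply_to": f"https://{self.name}.example/inbox"}

    def accept_invitation(self, invitation: dict) -> dict:
        # Invitee creates a private DID just for this relationship.
        my_did = new_private_did()
        self.connections[invitation["from"]] = {"my_did": my_did, "their_did": None}
        return {"type": "request", "from": self.name, "did": my_did}

    def accept_request(self, request: dict) -> dict:
        # Inviter stores the invitee's DID and creates its own private DID.
        my_did = new_private_did()
        self.connections[request["from"]] = {"my_did": my_did, "their_did": request["did"]}
        return {"type": "response", "from": self.name, "did": my_did}

    def accept_response(self, response: dict) -> None:
        # Invitee records the inviter's DID; both sides are now connected.
        self.connections[response["from"]]["their_did"] = response["did"]


alice, bob, carol = ToyAgent("alice"), ToyAgent("bob"), ToyAgent("carol")
for peer in (bob, carol):
    request = peer.accept_invitation(alice.create_invitation())
    peer.accept_response(alice.accept_request(request))

# Alice uses a different DID with each peer.
print(alice.connections["bob"]["my_did"] != alice.connections["carol"]["my_did"])  # True
```

Because Alice presents a different identifier to Bob and to Carol, the identifiers alone don’t let the two peers link their relationships with her.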

Lab: Agents Connecting

Let’s run through a live code example of two agents connecting and messaging one another. Follow this link to try it yourself and explore the code in the example.

Summary

This chapter focused on the Aries ecosystem (the way Aries is used in the real world), the Aries agent architecture (the components that make up an Aries agent), and how an Aries agent functions. We looked at examples of Aries agents, namely Alice and Faber College, and you stepped through a demo to verify, hold and issue verifiable credentials. We learned the difference between an edge agent and a cloud agent and common use cases for each. Next, we described the Aries agent architecture, discussing an agent framework, its controller and how the two work together. The main takeaway from this chapter is that as a developer, you will most likely be building your own controller, which will give your agent the business rules it needs to follow depending on your agent implementation.

You’ll notice in the example provided on the Aries Agents Internals and Protocols page, there was no mention of a public ledger. That’s right, Aries agents can provide a messaging interface without any ledger at all! Of course, since the main reason for using an Aries agent is to exchange verifiable credentials, and verifiable credential exchange requires a ledger, we’ll look at ledgers in the next section.

Chapter 3: Running a Network for Aries Development

Chapter Overview

In the last chapter, we learned all about the Aries agent architecture or the internals of an agent: the controller and framework. We also discussed common setups of agents and some basics about messaging protocols used for peer-to-peer communication. The lab in Chapter 2 demonstrated connecting two agents that didn’t use a ledger. Now that you’re comfortable with what an agent does and how it does it—and have seen agents at work off-ledger—let’s set the groundwork for using a ledger for your development needs.

Learning Objectives

In this chapter, we will describe:

- What you need to know and not know about ledgers in order to build an SSI application.

- How to get a local Indy network up and running, plus other options for getting started.

- The ledger’s genesis file—the file that contains the information necessary for an agent to connect to that ledger.

Ledgers: What You Don’t Need To Know

Many people come to the Indy and Aries communities thinking that because the projects are “based on blockchain,” the most important thing to learn first is the underlying blockchain. Even though their goal is to build an application for their use case, they dive into Indy, find the guide on starting an Indy network, and off they go, bumping their heads a few times on the way. Later, when they discover Aries and what they really need to do (build a controller, which is really just an app with APIs), they realize they’ve wasted a lot of time.

Don’t get us wrong. The ledger is important, and the attributes (robustness, decentralized control, transaction speed) are all key factors in making sure that the ledger your application will use is sufficient. It’s just that as an Aries agent application developer, the details of the ledger are Someone Else’s Problem. There are three situations in which an organization producing self-sovereign identity solutions will need to know about ledgers:

- If your organization is going to operate a ledger node (for example, a steward on the Sovrin network), the operations team needs to know how to install and maintain that node. There is no development involved in that work, just operation of a product.

- If you are a developer that is going to contribute code to a ledger project (such as Indy) or interface to a ledger not yet supported by Aries, you need to know about the development details of that ledger.

- If you are building a product for a specific use case, the business team must select a ledger that is capable of supporting that use case. Although there is some technical knowledge required for that, there is no developer knowledge needed.

So, assuming you are here because you are building an application, the less time you spend on ledgers, the sooner you can get to work on developing an application. For now, skip diving into Indy and use the tools and techniques outlined here. We’ll also cover some additional details about integrating with ledgers in Chapter 8, Planning for Production, later in this course.

With that, we’ll get on with the minimum you have to know about ledgers to get started with Aries development. In the following, we assume you are building an application for running against a Hyperledger Indy ledger.

Running a Local Indy Network

The easiest way to get a local Indy network running is to use von-network, a pre-packaged Indy network built by the Government of British Columbia’s (BC) VON team. In addition to providing a way to run a minimal four-node Indy network using Docker containers with just two commands, von-network includes:

- A well maintained set of versioned Indy container images.

- A web interface allowing you to browse the transactions on the ledger.

- An API for accessing the network’s genesis file (see below).

- A web form for registering DIDs on the network.

- Guidance for running a network in a cloud service such as Amazon Web Services or Digital Ocean.

The VON container images maintained by the BC Gov team are updated with each release of Indy, saving others the trouble of having to do that packaging themselves. A lab focused on running a VON network instance will be provided at the end of this chapter.

Or, Don’t Run a Network for Aries Development

What is easier than running a local network with von-network? How about not running a local network at all.

Another way to do development with Indy is to use a public Indy network sandbox. With a sandbox network, each developer doesn’t run their own local network; they access one that is running remotely. With this approach, you can run your own sandbox or, even easier, use the BC Government’s BCovrin (pronounced “Be Sovereign”) networks (dev and test). As of writing this course, the networks are open to anyone to use and are pretty reliable (although no guarantees!). They are rarely reset, so even long-lasting tests can use a BCovrin network.

An important thing that a developer needs to know about using a public sandbox network is that you must create unique credential definitions on every run, which means using a different issuer DID on every run. To get into the weeds a little:

- Indy DIDs are created from a seed, an arbitrary 32-character string. A given seed passed to Indy for creating a DID will always return the same DID, public key and private key.

- Historically, Indy/Aries developers have configured their apps to use the same seeds in development so that the resulting DIDs are the same every time. This works if you restart (delete and start fresh) the ledger and your agent storage on every run, but causes problems when using a long lasting ledger.

– Specifically, a credential definition that duplicates one already on the ledger (same DID, name and version) will fail to be written to the ledger.

- The best solution is to configure your app so that a randomly generated seed is used in development. The issuer’s DID is then unique on every run, so the credential definition name and version can remain the same on every run.
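A minimal sketch of the development-time approach: generate a fresh random 32-character seed per run unless a fixed seed is explicitly supplied (the production case described in the note that follows). `choose_seed` is a hypothetical helper; how the seed reaches your agent depends on the framework (ACA-Py, for example, accepts a `--seed` startup option).

```python
import secrets
from typing import Optional

SEED_LENGTH = 32  # Indy seeds are 32-character strings


def choose_seed(fixed_seed: Optional[str] = None) -> str:
    """Return the seed to use for the issuer's DID.

    Development (no fixed seed): a new random seed, so the issuer DID, and
    therefore every credential definition it writes, is unique on each run.
    Production (fixed seed supplied): the same seed every time.
    """
    if fixed_seed is None:
        # token_hex(16) returns 16 random bytes encoded as 32 hex characters.
        return secrets.token_hex(SEED_LENGTH // 2)
    if len(fixed_seed) != SEED_LENGTH:
        raise ValueError(f"seed must be {SEED_LENGTH} characters, got {len(fixed_seed)}")
    return fixed_seed


dev_seed = choose_seed()
print(len(dev_seed), dev_seed != choose_seed())  # 32 True
```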

Note: This is important for development. We’ll talk about some issues related to going to production in Chapter 8, Planning for Production, where the problem is reversed—we MUST use the same DID and credential definition every time we start an issuer agent.

In the labs in this course, you will see examples of development agents running against both local and remote sandbox Indy networks.

Proof of Concept Networks

When you get to the point of releasing a proof of concept (PoC) application “into the wild” for multiple users to try, you will want to use an Indy network that all of your PoC participants can access. As well, you will want that environment to be stable so that it is always available when it’s needed. We all know how mean the Demo Gods can be!

Some of the factors related to production applications (covered in Chapter 8, Planning for Production) will be important for a PoC. For such a test, a locally running network is not viable and you must run a publicly accessible network. For that, you have three choices:

- The BCovrin sandbox test network is available for such long term testing.

- The Sovrin Foundation operates two non-production networks:

– Builder Net: For active development of your solution.

– Staging Net: For proofs of concept, demos, and other non-production but stable use cases.

Note: Non-production Sovrin networks are permissioned, which means that you have to do a bit more to use those. We’ll cover a bit about getting ready for production deployments in the next section of this chapter and in Chapter 8, Planning for Production.

- You may choose to run your own network on something like Amazon Web Services or Azure. Basically, you run your own version of “BCovrin”.

Running your own network gives you the most control (and is pretty easy if you use von-network), so that might be the preferred option. However, if you need to interoperate with agents from other vendors, the public ledger you choose must be one that is supported by all agents. That often makes either or both of the BCovrin and Sovrin Foundation networks the best, and perhaps only, choice.

The Indy Genesis File

In working with an Indy network, the ledger’s genesis file contains the information necessary for an agent to connect to that ledger. Developers new to Indy, particularly those that try to run their own network, often have trouble with the genesis file, so we’ll cover it here.

The genesis file contains information about the physical endpoints (IP addresses and ports) for some or all of the nodes in the ledger pool, and the cryptographic material necessary to communicate with those nodes. Each genesis file is unique to its associated ledger and must be available to an agent that wants to connect to the ledger. It is called the genesis file because it has information about the genesis (first) transactions on the ledger. Recall that a core concept of blockchain is that each block of the chain is cryptographically tied to all the blocks that came before it, right back to the first (genesis) block on the chain.

The genesis file for Indy sandbox ledgers is (usually) identical, with the exception of the endpoints for the ledger—the endpoints must match where the nodes of the ledger are physically running. The cryptographic material is the same for sandbox ledgers because the genesis transactions are all the same. Those transactions:

- Create a trustee endorser DID on the ledger that has full write permission on the ledger.

- Permission the nodes of the ledger to process transactions.

Thus, if you get the genesis file for a ledger and you know the “magic” seed for the DID of the trustee (the identity owner entrusted with specific identity control responsibilities by another identity owner or with specific governance responsibilities by a governance framework), you can access and write to that ledger. That’s great for development and it makes deploying proof-of-concepts easy. Configurations like von-network take advantage of that consistency, using the “magic” seed to bootstrap the agent. For agents that need to write to the network (at least credential issuers, and possibly others), there is usually an agent provisioning step where the endorser DID is used to write a DID for the issuer that has sufficient write capabilities to do whatever else it needs to do. This is possible because the seed used to create the endorser DID is well known. In case you are wondering, the magic seed is:

000000000000000000000000Trustee1
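The “magic” seed is itself an ordinary 32-character Indy seed (24 zeros followed by “Trustee1”), which is why any agent can use it. As a hedged sketch, a provisioning script might accept it only when targeting a local or sandbox ledger; `check_endorser_seed` and its `sandbox` flag are hypothetical, not part of any Aries tool.

```python
# i.e. 000000000000000000000000Trustee1
WELL_KNOWN_TRUSTEE_SEED = "0" * 24 + "Trustee1"
SEED_LENGTH = 32


def check_endorser_seed(seed: str, sandbox: bool) -> str:
    """Validate a seed used to create the endorser DID during provisioning."""
    if len(seed) != SEED_LENGTH:
        raise ValueError(f"seed must be {SEED_LENGTH} characters")
    if seed == WELL_KNOWN_TRUSTEE_SEED and not sandbox:
        # On a production ledger no trustee seed is public, so using the
        # sandbox value there is a configuration mistake.
        raise ValueError("the well-known trustee seed only works on sandbox ledgers")
    return seed


print(len(WELL_KNOWN_TRUSTEE_SEED))  # 32
```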

For information about this, see this great answer on Stack Overflow about where it comes from.

As we’ll see in Chapter 8, Planning for Production, the steps are conceptually the same when you go to production: use a transaction endorser that has network write permissions to create your DID. However, you’ll need to take additional steps when using a production ledger (such as the Sovrin Foundation’s Main Net) because you won’t know the seed of any endorsers on the network. Connecting to a production ledger is just as easy: you get the network’s genesis file and pass that to your agent. However, being able to write to the network is more complicated because you don’t know the “magic DID” that enables full write access.

By the way, the typical problems that developers have with genesis files are that they either try to run an agent without a genesis file, or they use a default genesis file that has not had the endpoints updated to match the location of the nodes in their network.
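Because a stale endpoint is the usual culprit, it can help to inspect a genesis file before handing it to an agent. This sketch assumes the newline-delimited JSON layout of current Indy pool genesis files, where each line is a NODE transaction (type "0") carrying the node’s client IP and port. The sample line is abbreviated (key material omitted) and its values are made up; `node_endpoints` and `stale_endpoints` are hypothetical helpers.

```python
import json
from typing import Iterable, List, Tuple

# One abbreviated, made-up pool transaction (real lines also carry BLS keys etc.).
SAMPLE_GENESIS = json.dumps({
    "reqSignature": {},
    "txn": {
        "data": {
            "data": {"alias": "Node1", "client_ip": "172.17.0.2", "client_port": 9702,
                     "node_ip": "172.17.0.2", "node_port": 9701, "services": ["VALIDATOR"]},
            "dest": "example-node-identifier",
        },
        "metadata": {},
        "type": "0",
    },
    "txnMetadata": {"seqNo": 1},
    "ver": "1",
})


def node_endpoints(genesis_text: str) -> List[Tuple[str, str, int]]:
    """Return (alias, client_ip, client_port) for every NODE transaction."""
    endpoints = []
    for line in genesis_text.splitlines():
        if not line.strip():
            continue  # tolerate blank lines
        txn = json.loads(line)["txn"]
        if txn["type"] != "0":  # "0" is the NODE transaction type
            continue
        data = txn["data"]["data"]
        endpoints.append((data["alias"], data["client_ip"], int(data["client_port"])))
    return endpoints


def stale_endpoints(genesis_text: str, expected_hosts: Iterable[str]) -> List[Tuple[str, str, int]]:
    """Return the nodes whose client IP is not where your nodes actually run."""
    hosts = set(expected_hosts)
    return [ep for ep in node_endpoints(genesis_text) if ep[1] not in hosts]


print(node_endpoints(SAMPLE_GENESIS))  # [('Node1', '172.17.0.2', 9702)]
print(stale_endpoints(SAMPLE_GENESIS, ["192.168.1.50"]))  # [('Node1', '172.17.0.2', 9702)]
```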

Genesis File Handling in Aries Frameworks

Most Aries frameworks make it easy to pass to the agent the genesis file for the network to which it will connect. For example, we’ll see from the labs in the next chapter that to connect an instance of an ACA-Py agent to a ledger you use command line parameters to specify either a file name for a local copy of the genesis file, or a URL that is resolved to fetch the genesis file. The latter is often used by developers that use the VON Network because each VON Network instance has a web server deployed with the network that provides the URL for the network’s genesis file. The file is always generated after deployment, so the endpoints in the file are always accurate for that network.
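A hedged sketch of the two ACA-Py ledger options mentioned above: `--genesis-file` for a local copy and `--genesis-url` for a URL to fetch. The `http://localhost:9000/genesis` address assumes a local von-network with its web server on the default port; `ledger_args` and `fetch_genesis` are hypothetical helpers.

```python
import urllib.request
from typing import List, Optional


def ledger_args(genesis_file: Optional[str] = None,
                genesis_url: Optional[str] = None) -> List[str]:
    """Build the ledger portion of an ACA-Py command line."""
    if (genesis_file is None) == (genesis_url is None):
        raise ValueError("specify exactly one of genesis_file or genesis_url")
    if genesis_file is not None:
        return ["--genesis-file", genesis_file]
    return ["--genesis-url", genesis_url]


def fetch_genesis(url: str, timeout: float = 10.0) -> str:
    """Download a genesis file, e.g. to keep a local copy for --genesis-file."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


print(ledger_args(genesis_url="http://localhost:9000/genesis"))
# ['--genesis-url', 'http://localhost:9000/genesis']
```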

Lab: Running a VON Network Instance

Please follow this link to run a lab in which you will start a VON Network, browse the ledger, look at the genesis file and create a DID.

Summary

The main point of this chapter is to get you started in the right spot: you don’t need to dig deep into the ledger in order to develop SSI applications. You should now be aware of the options for running an Indy network and know the importance of the genesis file for your particular network.

In the last chapter, we covered the architecture of an agent and demonstrated connecting two agents that didn’t use a ledger. In this chapter, we covered running a ledger. So, in the next chapter, let’s combine the two and look at running agents that connect to a ledger.

Chapter 4: Developing Aries Controllers

Chapter Overview

In the second chapter, we ran two simple command line agents that connected and communicated with one another. In the third chapter, we went over running a local ledger. In this chapter, we’ll go into details about how you can build a controller by running agents that connect, communicate and use a ledger to exchange credentials. For developers wanting to build applications on top of Aries and Indy, this chapter is the core of what you need to know and is presented mostly as a series of labs.

We will learn about controllers by looking at aries-cloudagent-python (ACA-Py) in all the examples. Don’t worry, with ACA-Py, a controller (as we will see) can be written in any language, so if you aren’t a Python-er, you’ll be fine. However, ACA-Py is not suitable for mobile so we’ll leave that discussion until Chapter 7. In the last section of this chapter, we’ll talk briefly about the other current Aries open source frameworks.

Learning Objectives

In this chapter, you will learn:

- What an agent needs to know at startup.

- How protocols impact the structure and role of controllers.

- About the aries-cloudagent-python (ACA-Py) framework versus other Aries frameworks (such as aries-framework-dotnet and aries-framework-go).

The goal of this chapter is to have you build your own controller using the framework of your choice.

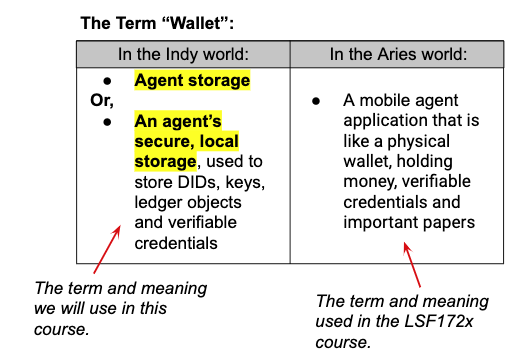

Aside: The Term “Wallet”

In the prerequisite course, LFS172x – Introduction to Hyperledger Sovereign Identity Blockchain Solutions: Indy, Aries and Ursa, we talked about the term “wallet” as a mobile application that is like a physical wallet, holding money, verifiable credentials and important papers. This is the definition the Aries community would like to use. Unfortunately, the term has historically had a second meaning in the Indy community, and it’s a term that developers in Aries and Indy still see. In Indy, the term “wallet” is used for the storage part of an Indy agent, the place in the agent where DIDs, keys, ledger objects and credentials reside. That definition is still in common use in the source code that developers see in Indy and Aries.

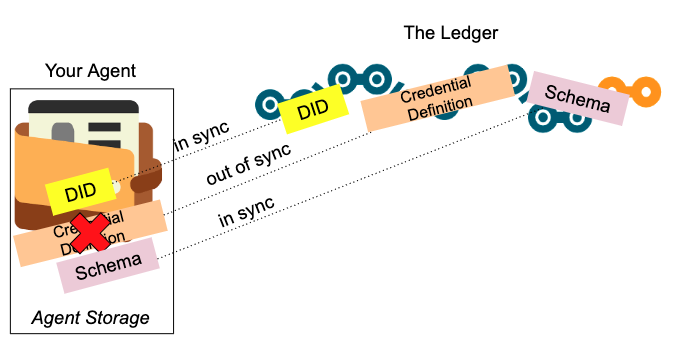

So, while the Indy use of the term wallet is being eliminated in Aries, because of the use of the existing Indy components in current Aries code (at least at the time of writing this course), we’re going to be using the Indy meaning for the term wallet—an agent’s secure, local storage. We’ll also use the term “agent storage” to mean the same thing.

“Wallet” in the Indy and Aries Worlds

Agent Start Up

An Aries agent needs to know a lot of configuration information as it starts up. For example, it needs to know:

- The location of the genesis file for the ledger it will use (if any).

- If it needs to create items (DIDs, schema, etc.) on the ledger, how to do that, or how to find them if they have already been created.

- Transport (such as HTTP or web sockets) endpoints for messaging other agents.

- Storage options for keys and other data.

- Interface details between the agent framework and the controller for events and requests.

These are concepts we’ve talked about earlier and that you should recognize. Need a reminder? Check out the “Aries Agent Architecture” section of Chapter 2. We’ll talk about these and other configuration items in this chapter and the next.

Command Line Parameters

Most of the options for an agent are configured when starting up ACA-Py, using command line options in the form “--option <extra info>”. For example, to specify the name of the genesis file the agent is to use, the command line option is “--genesis-file <genesis-file>”. Because there are so many startup options, and new ones are added frequently, ACA-Py is self-documenting: the “--help” option lists the options for the version of ACA-Py you are using.

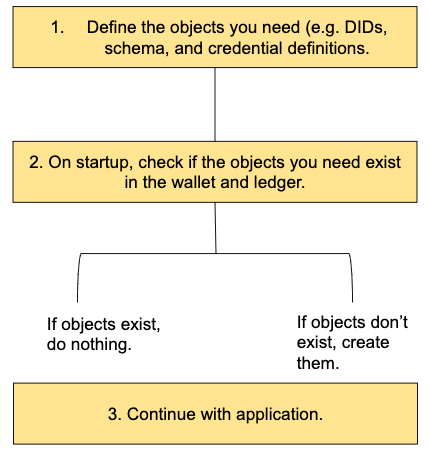

The provision and start Options

An agent is a stateful component that persists data in its wallet and to the ledger. When an agent starts up for the very first time, it has no persistent storage and so it must create a wallet and any ledger objects it will need to fulfill its role. When we’re developing an agent, we’ll do that over and over: start an agent, create its state, test it, stop it and delete it. However, when an agent is put into production, we only initialize its state once. We must be able to stop and restart it such that it finds its existing state, without having to recreate its wallet and all its contents from scratch.

Because of this requirement of a one time “start from scratch” and a many times “start with data,” ACA-Py provides two major modes of operation, provision and start. Provision is intended to be used one time per agent instance to establish a wallet and the required ledger objects. This mode may also be used later when something new needs to be added to the wallet and ledger, such as an issuer deciding to add a new type of credential they will be issuing. Start is used for normal operations and assumes that everything is in place in the wallet and ledger. If not, it should error and stop—an indicator that something is wrong.

The provision and start separation is done for security and ledger management reasons. Provisioning a new wallet often (depending on the technical environment) requires higher authority (e.g. root) database credentials. Likewise, creating objects on a ledger often requires the use of a DID with more access permissions. By separating out provisioning from normal operations, those higher authority credentials do not need to be available on an ongoing basis. As well, on a production ledger such as Sovrin, there is a cost to write to the ledger. You don’t want to be accidentally writing ledger objects as you scale up and down ACA-Py instances based on load. We’ve seen instances of that.

We recommend the pattern of having separate provisioning and operational applications, with the operational app treating the ledger as read-only. When initializing, the operational app should verify that all the needed objects are available in the wallet and ledger, and should error and stop if they don’t exist. During development, we recommend using a script that makes it easy to run the two steps in sequence whenever you start an environment from scratch (a fresh ledger and empty wallet).
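The start-mode check recommended above can be sketched as follows. This assumes your operational app can list which objects it found in its wallet and on the ledger; `verify_provisioned`, `MissingStateError` and the object names are hypothetical. In ACA-Py itself, the two modes correspond to the `aca-py provision` and `aca-py start` commands.

```python
from typing import Iterable, Set


class MissingStateError(RuntimeError):
    """Raised when start mode finds that provisioning has not been done."""


def verify_provisioned(found: Set[str], required: Iterable[str]) -> None:
    """Fail fast (rather than writing to the ledger) if anything is missing."""
    missing = sorted(set(required) - found)
    if missing:
        raise MissingStateError(
            "missing: " + ", ".join(missing) + "; run the provisioning step first"
        )


REQUIRED = ["issuer_did", "schema:degree:1.0", "cred_def:degree:1.0"]

verify_provisioned({"issuer_did", "schema:degree:1.0", "cred_def:degree:1.0"}, REQUIRED)
try:
    verify_provisioned({"issuer_did"}, REQUIRED)
except MissingStateError as err:
    print(err)  # missing: cred_def:degree:1.0, schema:degree:1.0; run the provisioning step first
```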

The only possible exception to the “no writes in start mode” method is the handling of credential revocations, which involve ledger writes. However, that’s pretty deep into the weeds of credential management, so we won’t go further with that here.

Startup Option Groups

The ACA-Py startup options are divided into a number of groups, as outlined in the following:

- Debug: Options for development and debugging. Most (those prefixed with “auto-”) implement default controller behaviors so the agent can run without a separate controller. Several options enable extra logging around specific events, such as when establishing a connection with another agent.

- Admin: Options to configure the connection between ACA-Py and its controller, such as the endpoint and port to which the controller should send requests. Important required parameters include whether and how the ACA-Py/controller interface is secured.

- General: Options about extensions (external Python modules) that can be added to an ACA-Py instance and where non-Indy objects are stored (such as connections and protocol state objects).

- Ledger: Options that provide different ways for the agent to connect to a ledger.

- Logging: Options for setting the level of logging for the agent and to where the logging information is to be stored.

- Protocol: Options for special handling of several of the core protocols. We’ll be going into a deeper discussion of protocols in the next chapter.

- Transport: Options about the interfaces that are to be used for connections and messaging with other agents.

- Wallet: Options related to the storage of keys, DIDs, Indy ledger objects and credentials. This includes the type of database (e.g. SQLite or PostgreSQL) and credentials for accessing the database.
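As a hedged illustration, here is one way a development script might assemble an ACA-Py start command, with options organized by some of the groups above. The flags are standard ACA-Py options, but the ports, names, key and webhook URL are made-up values, and the grouping is for readability only (“--help” shows the authoritative grouping). “--admin-insecure-mode” and the “--auto-” options are only appropriate for local development. `start_command` is a hypothetical helper.

```python
import shlex
from typing import Dict, List

# Illustrative values only; adjust ports, names and keys for your environment.
OPTIONS: Dict[str, List[List[str]]] = {
    "admin": [["--admin", "0.0.0.0", "8021"], ["--admin-insecure-mode"],
              ["--webhook-url", "http://localhost:8022/webhooks"]],
    "transport": [["--inbound-transport", "http", "0.0.0.0", "8020"],
                  ["--outbound-transport", "http"]],
    "ledger": [["--genesis-url", "http://localhost:9000/genesis"]],
    "wallet": [["--wallet-type", "indy"], ["--wallet-name", "alice_wallet"],
               ["--wallet-key", "alice_wallet_key"]],
    "logging": [["--log-level", "info"]],
    "debug": [["--auto-accept-invites"], ["--auto-accept-requests"]],
}


def start_command(options: Dict[str, List[List[str]]]) -> List[str]:
    """Flatten grouped options into an argv list for `aca-py start`."""
    argv = ["aca-py", "start"]
    for group in options.values():
        for option in group:
            argv.extend(option)
    return argv


print(shlex.join(start_command(OPTIONS)))
```

Keeping options in named groups makes it easy for a script to swap one group (say, the ledger) between a local von-network and a remote sandbox without touching the rest.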

Note: While the naming and activation method of the options are specific to ACA-Py, few of the options are exclusive to ACA-Py. Any agent, even one from another Aries framework, likely offers (or should offer) these options.

Lab: Agent Startup Options

Here is a short lab to show you how you can see all of the ACA-Py startup options.

The many ACA-Py startup options can be overwhelming. We’ll address that in this course by pointing out which options are used by the different sample agents with which we’ll be working.

How Aries Protocols Impact Controllers

Before we get into the internals of controllers, we need to talk about Aries protocols, introduced in Chapter 5 of the prerequisite course (LFS172x – Introduction to Hyperledger Sovereign Identity Blockchain Solutions: Indy, Aries and Ursa) and a subject we’ll cover much deeper in the next chapter. For now, we’ll cover just enough to understand how protocols impact the structure and role of controllers.

As noted earlier, Aries agents communicate with each other via a message mechanism called DIDComm (DID Communication). DIDComm uses DIDs (specifically private, pairwise DIDs—usually) to enable secure, asynchronous, end-to-end encrypted messaging between agents, with messages (usually) routed through some configuration of intermediary agents. Aries agents use (an early instance of) the did:peer DID method, which uses DIDs that are not published to a public ledger, but that are only shared privately between the communicating parties, usually just two agents.

The caveats in the above paragraph:

- An enterprise agent may use a public DID for all of its peer-to-peer messaging.

- Agents may directly message one another without any intermediate agents.

- The early version of did:peer does not support rotating keys of the DID.

Given the underlying secure messaging layer (routing and encryption are covered in Chapter 7), Aries protocols are standard sequences of messages communicated on the messaging layer to accomplish a task. For example:

- The connection protocol (RFC 0160) enables two agents to establish a connection through a series of messages—an invitation, a connection request and a connection response.

- The issue credential protocol enables an agent to issue a credential to another agent.

- The present proof protocol enables an agent to request and receive a proof from another agent.

Each protocol has a specification that defines the protocol’s messages, a series of named states, one or more roles for the different participants, and a state machine that defines the state transitions triggered by the messages. For example, the following table shows the messages, roles and states for the connection protocol. Each participant in an instance of a protocol tracks the state based on the messages they’ve seen. Note that the states of the agents executing a protocol may not be the same at any given time. An agent’s state depends on the most recent message received or sent by/to that agent. The details of the components of all protocols are covered in Aries RFC 0003.

| Message | Role of Sender | State |
|---|---|---|
| invitation | inviter | invited |
| connectionRequest | invitee | requested |
| connectionResponse | inviter | connected |
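The table can be read as a small state machine that each participant runs over the messages it sends or receives. This is a simplified sketch of just the three rows above: the starting state name `null` is an assumption, `ConnectionStateMachine` is a hypothetical class, and RFC 0160 defines the protocol’s full set of states and transitions.

```python
from typing import Dict, Tuple

# message -> (state required before the message, sender's role, state after it)
TRANSITIONS: Dict[str, Tuple[str, str, str]] = {
    "invitation": ("null", "inviter", "invited"),
    "connectionRequest": ("invited", "invitee", "requested"),
    "connectionResponse": ("requested", "inviter", "connected"),
}


class ConnectionStateMachine:
    """Tracks one participant's view of a single connection protocol instance."""

    def __init__(self) -> None:
        self.state = "null"  # assumed name for "no messages seen yet"

    def on_message(self, message: str, sender_role: str) -> str:
        """Apply a sent or received message and return the new state."""
        if message not in TRANSITIONS:
            raise ValueError(f"unknown message type: {message}")
        required, role, next_state = TRANSITIONS[message]
        if self.state != required or sender_role != role:
            raise ValueError(
                f"{message} from {sender_role} is not valid in state {self.state!r}"
            )
        self.state = next_state
        return self.state


walk = ConnectionStateMachine()
walk.on_message("invitation", "inviter")
walk.on_message("connectionRequest", "invitee")
print(walk.on_message("connectionResponse", "inviter"))  # connected
```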

In ACA-Py, the code for protocols is implemented as (possibly externalized) Python modules. All of the core modules are in the ACA-Py code base itself. External modules are not in the ACA-Py code base, but are written in such a way that they can be loaded by an ACA-Py instance at run time, allowing organizations to extend ACA-Py (and Aries) with their own protocols. Protocols implemented as external modules can be included (or not) in any given agent deployment. All protocol modules include:

- The definition of a state object for the protocol.

– The protocol state object gets saved in agent storage while an instance of a protocol is waiting for the next event.

- The handlers for the protocol messages.

- The events sent to the controller on receipt of messages that are part of the protocol.

- Administrative messages that are available to the controller to inject business logic into the running of the protocol.


Each administrative message (endpoint) becomes part of the HTTP API exposed by the agent instance, and is visible in the OpenAPI/Swagger-generated user interface. More on that later in this chapter.
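From the controller’s side, calling one of those administrative endpoints is an ordinary HTTP request. A minimal sketch using only the standard library: the admin URL assumes ACA-Py was started with its admin API on port 8021, `admin_request` is a hypothetical helper, and `/connections/create-invitation` is used as an example path from ACA-Py’s connections API (check your instance’s Swagger page for the exact paths in your version). The `X-API-Key` header applies only when ACA-Py is started with an admin API key.

```python
import json
import urllib.request
from typing import Optional


def admin_request(admin_url: str, path: str, body: Optional[dict] = None,
                  api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a POST to an ACA-Py administrative endpoint."""
    headers = {"Content-Type": "application/json"}
    if api_key is not None:
        headers["X-API-Key"] = api_key
    return urllib.request.Request(
        admin_url.rstrip("/") + path,
        data=json.dumps(body or {}).encode("utf-8"),
        headers=headers,
        method="POST",
    )


req = admin_request("http://localhost:8021", "/connections/create-invitation")
print(req.get_method(), req.full_url)
# POST http://localhost:8021/connections/create-invitation
# To send it: urllib.request.urlopen(req) returns the JSON response.
```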

Aries Protocols: The Controller Perspective

We’ve defined all of the pieces of a protocol, and where the code lives in an Aries framework such as ACA-Py. Let’s take a look at a protocol from the controller’s perspective.

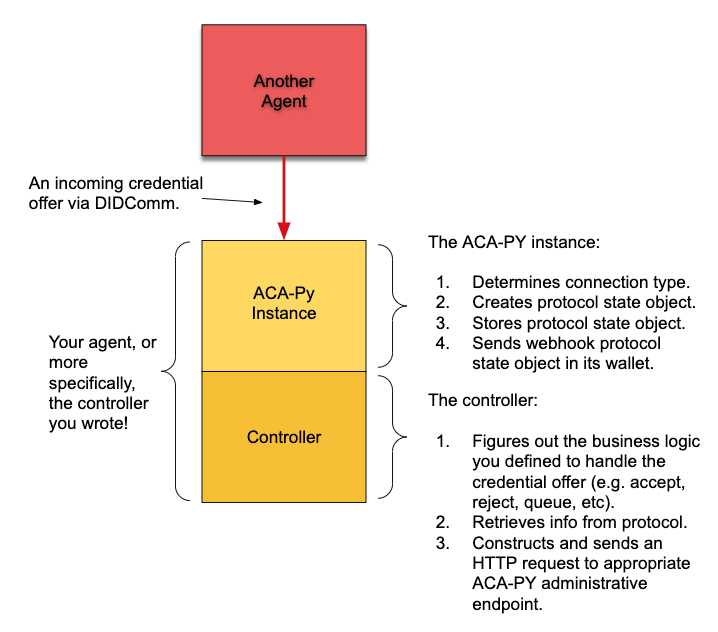

The agent has started up (an ACA-Py instance and its controller), and everything has been initialized. Some connections have been established but nothing much is going on. The controller is bored. Suddenly, a DIDComm message is received from another agent. It’s a credential offer message! We have to start a new instance of the “issue credential” protocol (RFC 0036). Here’s what happens:

- The ACA-Py instance determines what connection is involved and creates a protocol state object. It sets some data in the object and sends a webhook (HTTP request) to the controller with information about the message and the protocol state object.

– This assumes that the ACA-Py instance is NOT set up with “--auto-respond-credential-offer”. If it were, the ACA-Py instance would handle the whole thing itself and the controller would just get the webhook, but not have to do anything. Sigh…back to being bored.

– Since the ACA-Py instance doesn’t know how long the controller will take to tell it what to do next, the ACA-Py instance saves the protocol state object in its wallet and moves on to do other things, like waiting for more agent messages.

- The controller panics (OK, it doesn’t…). The controller code (that you wrote!) figures out what the rules are for handling credential offers. Perhaps it just accepts (or rejects) them. Perhaps it puts the offer into a queue for a legacy app to process and tell it what to do. Perhaps it opens up a GUI and waits for a person to tell it what to do.

– Depending on how long it will take to decide on the answer, the controller might persist the state of the in-flight protocol in a database.

- When the controller decides (or is told) what to do with the credential offer, it retrieves (if necessary) the information about the in-flight protocol and constructs an HTTP request to send the answer to the appropriate ACA-Py administrative endpoint. In this example, the controller might use the “credential_request” endpoint to request the offered credential.

- The ACA-Py instance determines what connection is involved and creates a protocol state object. It sets some data in the object and sends a webhook (HTTP request) to the controller with information about the message and the protocol state object.

Aries Protocols in ACA-Py from the Controller’s Perspective

Licensed under CC BY 4.0

- Once it has sent the request to the ACA-Py instance, the controller might persist the data about the interaction and then lounge around for awhile, if it has nothing left to do.

- The ACA-Py instance receives the request from the controller and gets busy. It retrieves the corresponding protocol state object from its wallet and constructs and sends a properly crafted “credential request” message in a DIDComm message to the other agent, the one that sent the credential offer.

– The ACA-Py agent then saves the protocol state object in its wallet again as it has no idea how long it will take for the other agent to respond. Then it returns to waiting for more stuff to do.
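The controller's part of the walkthrough above can be sketched as a small function. It maps an incoming webhook to the administrative call the controller should make. Several names here are assumptions taken from ACA-Py's version 1.0 issue-credential admin API as we understand it: the webhook topic `issue_credential`, the `offer_received` state, the `credential_exchange_id` field, and the `/issue-credential/records/{id}/send-request` path. Names vary between ACA-Py versions, so check them against the Swagger page of your running instance. The `should_accept` rule, the connection ID and `ADMIN_URL` are hypothetical placeholders.

```python
import json
import urllib.request
from typing import Optional, Tuple

# Placeholder: the admin host/port your ACA-Py instance was started with.
ADMIN_URL = "http://localhost:8031"


def should_accept(offer: dict) -> bool:
    """Hypothetical business rule: only accept offers arriving on a connection we trust."""
    trusted = {"faber-connection-id"}  # illustrative ID, not a real value
    return offer.get("connection_id") in trusted


def next_admin_call(topic: str, payload: dict) -> Optional[Tuple[str, str]]:
    """Map a webhook (topic + payload) to the (method, path) the controller should call, if any."""
    if topic != "issue_credential" or payload.get("state") != "offer_received":
        return None  # not an event this controller acts on
    if not should_accept(payload):
        return None  # a real controller might reject, queue for review, or ask a person
    cred_ex_id = payload["credential_exchange_id"]
    return ("POST", f"/issue-credential/records/{cred_ex_id}/send-request")


def call_admin(method: str, path: str, body: Optional[dict] = None) -> dict:
    """Send a JSON request to the agent's admin API and decode the JSON response."""
    data = json.dumps(body or {}).encode()
    req = urllib.request.Request(ADMIN_URL + path, data=data, method=method,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

Keeping the decision (`next_admin_call`) separate from the HTTP call (`call_admin`) means the business rules can be tested without a running agent.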

That describes what happens when a protocol is triggered by a message from another agent. A controller might also decide it needs to initiate a protocol. In that case, the protocol starts with the controller sending an initial request to the appropriate endpoint of the ACA-Py instance’s HTTP API. In either case, the behavior of the controller is the same:

- Get notified of an event either by the ACA-Py instance (via a webhook) or perhaps by some non-Aries event from, for example, a legacy enterprise app.

- Figure out what to do with the event, possibly by asking some other service (or person).

  - This might put the controller back into a “waiting for an event” state.

- Send a response to the event to the ACA-Py instance via the administrative API.

In between those steps, the controller may need to save off the state of the transaction.

As we’ve discussed previously, developers building Aries agents for particular use cases focus on building controllers. As such, the event loop above describes the work of a controller and the code to be developed. If you have ever done any web development, that event loop is going to look very familiar! It’s exactly the same.
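The event loop can be reduced to a few lines. The sketch below assumes that something else, such as a web handler receiving webhooks or an adapter for a legacy app, pushes `(topic, payload)` events onto a `queue.Queue`. The `None` stop sentinel, the `"id"` payload key and the `decide`/`respond` callables are hypothetical choices for illustration.

```python
import queue
from typing import Callable, Dict, Optional, Tuple

Event = Tuple[str, dict]  # (topic, payload)


def run_controller(events: "queue.Queue[Optional[Event]]",
                   decide: Callable[[str, dict], Optional[dict]],
                   respond: Callable[[dict], None],
                   in_flight: Dict[str, dict]) -> None:
    """Minimal controller loop: wait for an event, decide, respond, tracking in-flight state."""
    while True:
        item = events.get()              # 1. wait to be notified (webhook, legacy app, ...)
        if item is None:                 # sentinel used in this sketch to stop the loop
            break
        topic, payload = item
        key = payload.get("id", "")
        in_flight[key] = payload         # save state: the decision may take a while
        action = decide(topic, payload)  # 2. figure out what to do (maybe ask someone)
        if action is None:
            continue                     # nothing to send yet; keep the state and wait
        respond(action)                  # 3. answer via the agent's administrative API
        in_flight.pop(key, None)         # this step of the interaction is finished
```

A controller-initiated protocol fits the same loop. The "event" is simply something like a user clicking a button, and `respond` makes the first admin call.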

Aries agent controller developers must understand the protocols that they are going to use, including the events the controller will receive, and the protocol’s administrative messages exposed via the HTTP API. Then, they will write controllers that loop waiting for events, deciding what to do with the event, and responding.

And that’s it! We’ve covered the basics of protocols from the perspective of the controller. Let’s see controllers in action!

Lab: Alice Gets a Credential

In this section, we’ll start up two command line agents, much as we did in Chapter 2. However, this time, one of the participants, Faber College, will carry out the steps to become an issuer (including creating objects on the ledger), and issue a credential to the other participant, Alice. As a holder/prover, Alice must also connect to the ledger. Later, Faber will request a proof from Alice for claims from the credential, and Alice will oblige.
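Becoming an Indy issuer comes down to two ledger writes, done in order. First comes a schema, which lists the attribute names. Second comes a credential definition that references that schema. The sketch below only builds the request bodies. The routes (`POST /schemas`, `POST /credential-definitions`) and field names are assumed from ACA-Py's admin API and should be confirmed on the Swagger page. The schema name, version and attributes are made-up values, not the ones the lab uses.

```python
from typing import List


def schema_request(name: str, version: str, attributes: List[str]) -> dict:
    """Body for creating a schema on the ledger (assumed route: POST /schemas)."""
    if not attributes:
        raise ValueError("a schema needs at least one attribute")
    return {"schema_name": name, "schema_version": version, "attributes": attributes}


def cred_def_request(schema_id: str, tag: str = "default") -> dict:
    """Body for creating a credential definition (assumed route: POST /credential-definitions)."""
    return {"schema_id": schema_id, "tag": tag, "support_revocation": False}


# Order matters: the credential definition references the schema created first.
SETUP_STEPS = [
    ("POST", "/schemas", schema_request("degree schema", "1.0", ["name", "degree", "date"])),
    # The real schema_id is returned by the /schemas call; this value is a placeholder.
    ("POST", "/credential-definitions", cred_def_request("<schema_id from previous step>")),
]
```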

We’ll do a couple more versions of this interaction in subsequent labs. There is not much content to see in this chapter of the course, but there is lots in the labs themselves. Don’t skip them!

Click here to access the lab.

Links to Code

There are a number of important things to highlight in the code from the previous lab, and in the ones to come. Make sure you followed the links to the key parts of the controller code when you did the previous lab. For example, in the previous lab, Alice and Faber ACA-Py agents were started, and it’s helpful to know which ACA-Py command line parameters were used for each. Several of the labs that follow include a comparable list of links that you can use to inspect and understand the code.

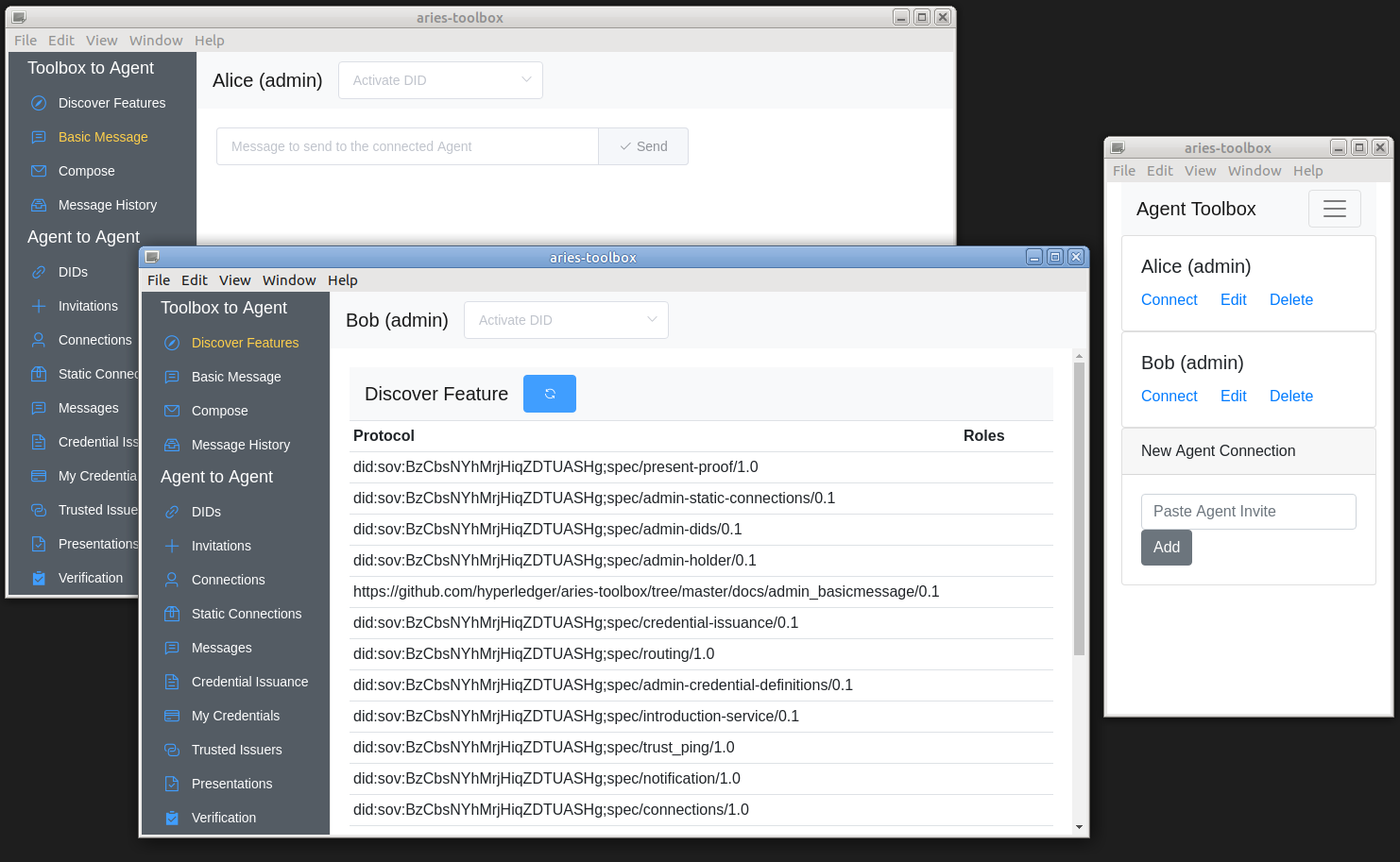

Learning the ACA-Py Controller API using OpenAPI (aka Swagger)

Now that you have seen some examples of a running controller, let’s get minimal. As noted earlier, ACA-Py has been implemented to expose an HTTP interface to the controller: the “Admin” interface. To make that HTTP interface easy to understand, ACA-Py automatically generates an industry-standard OpenAPI (also called Swagger) configuration. In turn, that provides a web page that you can use to see all the calls exposed by a running instance of ACA-Py, with examples and the ability to “try it,” that is, to execute the available HTTP endpoints. Having an OpenAPI/Swagger definition for ACA-Py also lets you do cool things such as generate code (in your favorite language) to create a skeleton controller without any coding. If you are new to OpenAPI/Swagger, here’s a link to what it is and how you can use it. The most important use? Being able to quickly test something out in seconds just by spinning up an agent and using OpenAPI/Swagger.

With ACA-Py, the exposed API is dynamic for the running instance. If you start an instance of ACA-Py with one or more external Python modules loaded (using the “--plugin <module>” command line parameter), those modules must add administrative endpoints to the OpenAPI/Swagger definition so that they are visible in the OpenAPI/Swagger interface.
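Because the OpenAPI/Swagger definition is plain JSON, a few lines of code can list every endpoint a running instance exposes. Comparing the lists with and without a `--plugin` module is a quick way to see which endpoints the plugin added. The `/api/docs/swagger.json` path in `fetch_spec` is an assumption about where ACA-Py serves the definition, so check your version. The parsing follows the standard `paths` layout of OpenAPI/Swagger documents.

```python
import json
import urllib.request
from typing import List, Tuple

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options"}


def list_endpoints(spec: dict) -> List[Tuple[str, str, str]]:
    """Return (METHOD, path, summary) for every operation in an OpenAPI/Swagger document."""
    rows = []
    for path, item in sorted(spec.get("paths", {}).items()):
        for method, op in item.items():
            if method.lower() in HTTP_METHODS:  # skip non-operation keys such as "parameters"
                rows.append((method.upper(), path, op.get("summary", "")))
    return rows


def fetch_spec(admin_url: str) -> dict:
    """Download the definition from a running agent (the path is an assumption; verify it)."""
    with urllib.request.urlopen(admin_url + "/api/docs/swagger.json") as resp:
        return json.load(resp)
```

For example, `set(list_endpoints(with_plugin)) - set(list_endpoints(without_plugin))` yields just the plugin's endpoints.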

Lab: Using ACA-Py’s OpenAPI/Swagger Interface

In this lab, we’ll use the OpenAPI/Swagger interface to interact with ACA-Py instances so you can really start to understand how to write your own controller for ACA-Py that handles your specific use case. The only controller is the OpenAPI/Swagger user interface, and you will manually invoke the API calls in sequence (using the “try it” link) to go through the same Faber and Alice use case. It’s up to you to make sure Alice gets her credential!

Click here to run the OpenAPI/Swagger lab.

Lab: Help Alice Get a Job

Time to do a little development. The next assignment is to extend the command line lab with Alice and Faber to include ACME Corporation. Alice wants to apply for a job at ACME. As part of the application process, Alice needs to prove that she has a degree. Unfortunately, the person writing the ACME agent’s controller quit just after getting started building it. Your job is to finish building the controller, deploy the agent and then walk through the steps that Alice needs to do to prove she’s qualified to work at ACME.
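The core of the ACME controller is asking Alice for a proof. The sketch below builds an Indy-style proof request that asks for attributes and, optionally, ">=" predicates. Each item is restricted to credentials issued under one credential definition, so that only Faber's degree counts. The outer body shape (`connection_id` plus `proof_request`) and the route it would be posted to (`POST /present-proof/send-request`) are assumptions based on ACA-Py's version 1.0 present-proof API. The referent key names such as `0_degree_uuid` are an arbitrary convention, and the function name is hypothetical.

```python
from typing import Dict, List, Optional


def degree_proof_request(connection_id: str, cred_def_id: str,
                         attributes: List[str],
                         min_values: Optional[Dict[str, int]] = None) -> dict:
    """Build a proof request body for attributes (and optional >= predicates) from one cred def."""
    requested_attributes = {
        f"{i}_{name}_uuid": {"name": name, "restrictions": [{"cred_def_id": cred_def_id}]}
        for i, name in enumerate(attributes)
    }
    requested_predicates = {
        f"{i}_{name}_GE_uuid": {"name": name, "p_type": ">=", "p_value": value,
                                "restrictions": [{"cred_def_id": cred_def_id}]}
        for i, (name, value) in enumerate(sorted((min_values or {}).items()))
    }
    return {
        "connection_id": connection_id,
        "proof_request": {
            "name": "Proof of Education",
            "version": "1.0",
            "requested_attributes": requested_attributes,
            "requested_predicates": requested_predicates,
        },
    }
```

Restricting by `cred_def_id` is what stops Alice from satisfying the request with a self-issued "degree."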

Alice needs your help. Are you up for it? Click here to run the lab.

Lab: Python Not For You?

The last lab in this chapter provides examples of controllers written in other languages. GitHub user amanji (Akiff Manji) has taken the agents that are deployed in the command line version of the demo and written a graphical user interface (GUI) controller for each participant using a different language/tech stack. Specifically:

- Alice’s controller is written in Angular.

- Faber’s controller is written in C#/.NET.

- ACME’s controller is written in NodeJS/Express.

To run the lab, go to the instructions here.

As an aside, Akiff decided to make these controllers based on an issue posted in the ACA-Py repo. The issue was labelled “Help Wanted” and “Good First Issue.” If you are looking to contribute to some of the Aries projects, look in the repos for those labels on open issues. Contributions are always welcome!

Building Your Own Controller

Want to go further? This is optional, but we recommend doing this as an exercise to solidify your knowledge. Build your own “Alice” controller in the language of your choice. Use the pattern found in the two other Alice controllers (command line Python and Angular) and write your own. You might start by generating a skeleton using the OpenAPI/Swagger tools, or just by building the app from scratch. No link to a lab or an answer for this one. It’s all up to you!

If you build something cool, let us know by clicking here and submitting an issue. If you want, we might be able to help you package up your work and create a pull request (PR) to the aries-acapy-controllers repo.

Controllers for Other Frameworks

In this chapter we have focused on understanding controllers for aries-cloudagent-python (ACA-Py). The Aries project has several frameworks other than ACA-Py in various stages of development. In this section, we’ll briefly cover how controllers work with those frameworks.

aries-framework-dotnet

The most mature of the other Aries frameworks is aries-framework-dotnet, written in Microsoft’s open source C# language on the .NET development platform. The architecture for the controller and framework with aries-framework-dotnet is a little different from ACA-Py. Instead of embedding the framework in a web server, the framework is built into a library, and the library is embedded in an application that you create. That application is equivalent to an ACA-Py controller. As such, the equivalent to the HTTP API in ACA-Py is the API between the application and the embedded framework. This architecture is illustrated below.

Aries Agent Architecture (aries-framework-dotnet)

Licensed under CC BY 4.0

As we’ll see in the chapter on mobile agents, aries-framework-dotnet can be embedded in Xamarin and used as the basis of a mobile agent. It can also be used as a full enterprise agent, or as a more limited cloud routing agent. A jack-of-all-trades option!

aries-framework-go

aries-framework-go takes the same approach to the controller/framework architecture as ACA-Py: an HTTP interface between the two. In fact, the team building the framework has used the same administrative interface calls as ACA-Py in its implementation. As such, a controller written for ACA-Py should work with an instance of aries-framework-go.

What’s unique about this framework is that it is written entirely in golang without any non-golang dependencies. That means that the framework can take advantage of the rich golang ecosystem and distribution mechanisms. That also means the framework does not embed the indy-sdk (libindy) and as such does not support connections to Indy ledgers or the Indy verifiable credentials exchange model. Instead, the team is building support for other ledgers and other verifiable credential models. It’s likely that support could (and may in the future) be added for Indy, but that might be at the cost of the “pure golang” benefits inherent in the current implementation.

Other Open Source Aries Frameworks

As we are creating this course, other Aries open source frameworks or Aries SDKs are being written in other languages and tech stacks. These include Ruby, Java and JavaScript. Check the Aries Working Group wiki site to find which teams are building those capabilities, how they have structured their implementations, and how you can start building a controller on top of their work, or how you can help them build out the frameworks.

Summary

We’ve covered an awful lot in this chapter! As we said at the beginning of the chapter, this is the core of the course, the hands-on part that will really get you understanding Aries development and how you can use it in your use cases. The rest of the course will be a lot lighter on labs, but we’ll refer back to the labs from this chapter to put those capabilities into a context with which you have experience.

Chapter 5. Digging Deeper—The Aries Protocols

Chapter Overview

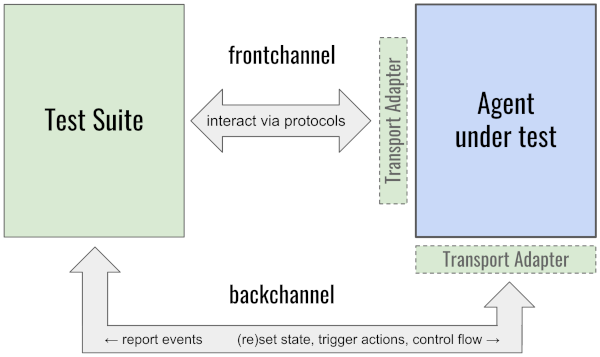

In the last chapter we focused on how a controller injects business logic to control the agent and make it carry out its intended use cases. In this chapter we’ll focus on what the controller is really controlling—the messages being exchanged by agents. We’ll look at the format of the messages and the range of messages that have been defined by the Aries Working Group. Later in the course, when we’re talking about mobile agents, we’ll look deeper into how messages get from one agent to another.

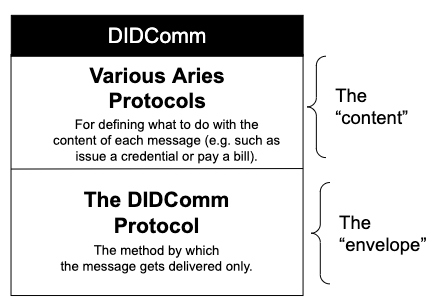

Some of the topics in this chapter come under the heading of “DIDComm Protocol,” where pairs (and perhaps in the future, groups) of agents connect and securely exchange messages using DIDs that each has created and (usually) shared privately. The initial draft and implementations of the DIDComm protocol described here were incubated in the Hyperledger Indy Agent Working Group, and later the Aries Working Group. As this course is being written, some of the maintenance and evolution of DIDComm is being transferred from Aries to the DIDComm Working Group within the Decentralized Identity Foundation (DIF). This transfer is being done because Hyperledger is an organization that creates open source code, not a standards body. Part of DIF’s charter is to define standards, making it a more suitable steward for this work. Of course, open source implementations of the standards will remain an important part of the Hyperledger Aries project.

Learning Objectives

As we’ve just explained, this chapter is all about messaging and the protocols that allow messaging to happen. We will discuss:

- The aries-rfcs repository—the home of all the documents!

- The two layers of Aries messaging protocols—or “DIDComm 101.”

- Which protocol layer a developer needs to worry about (hint: it’s the Aries protocols layer).

- The format of protocol messages.

- Message processing in general.